Virtual Hosting – A Well Forgotten Enumeration Technique

I have been a pentester for several years and have seen my fair share of other pentesters and consultants at work. As with most people in the security community, I’ve learned a tremendous amount from others. This blog post was sparked by a gap I commonly see during network pentests: pentesters forget virtual host fuzzing after observing that many domains resolve to only one or two IP addresses.

As an example, let’s say you were given the range 10.15.1.0/24 to test. In that range you find the following hosts are online:

10.15.1.1

10.15.1.2

10.15.1.4

10.15.1.50

10.15.1.211

At this point you should be running a reverse DNS lookup on each of those IPs to see which domain names map to each one. You may also perform certificate scraping on TLS-enabled ports to grab a few more domain names. Let’s say you gather that data and come back with the following:

10.15.1.1:router.wya.pl

10.15.1.2:win7.wya.pl

10.15.1.4:server.wya.pl

10.15.1.50:app1.wya.pl,app2.wya.pl,app3.wya.pl,*.wya.pl

10.15.1.211:device.wya.pl

The 10.15.1.50 host has three domains associated with it along with a wildcard certificate. This doesn’t guarantee there are additional hostnames that the server will respond to, but it might indicate there are subdomains with that suffix. Normally you’d want to take all these domain names and run them through Aquatone or maybe httpx to see whether the responses differ.
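The two lookups used to build that mapping can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a replacement for dedicated tooling. The function names are my own, and `cert_names` assumes the certificate chain validates against your trust store, which often won't be true on internal networks.

```python
import socket
import ssl


def reverse_dns(ip):
    """Return the PTR name for an IP, or None if there is no record."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except (socket.herror, socket.gaierror):
        return None


def names_from_cert(cert):
    """Collect the subject CN and DNS SANs from a getpeercert()-style dict."""
    names = set()
    for rdn in cert.get("subject", ()):
        for key, value in rdn:
            if key == "commonName":
                names.add(value)
    for kind, value in cert.get("subjectAltName", ()):
        if kind == "DNS":
            names.add(value)
    return sorted(names)


def cert_names(ip, port=443, timeout=5.0):
    """Fetch the certificate presented on ip:port and list its names."""
    ctx = ssl.create_default_context()
    # We connect by IP, so skip the hostname match. The chain is still
    # validated, so a self-signed certificate raises ssl.SSLError here.
    ctx.check_hostname = False
    with socket.create_connection((ip, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock) as tls:
            return names_from_cert(tls.getpeercert())
```

A wildcard entry such as `*.wya.pl` comes back as a plain string, so it is easy to spot and reuse later as a suffix for guessing hostnames.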

To continue with the example, let’s say you noticed that https://app1.wya.pl looked very different from https://app2.wya.pl.

How does that work? Aren’t they on the same IP address?

Virtual hosting is a concept that allows a single server to differentiate between hostnames. This means that a single IP can respond to many domain names and serve different content depending on which one was requested. It is supported by Apache, Nginx, load balancers, and more.

Essentially the server administrator configures a default route for unknown hostnames along with the primary one. They would then configure additional routes for other hostnames and serve that content when requested properly. The DNS server would typically be configured with entries for all hostnames the server accepts corresponding to the server’s IP.

In practice what does this look like? When everything is set up properly, as a user on the example network I should be able to go to https://app1.wya.pl and https://app2.wya.pl in a browser. The DNS lookup would succeed and resolve to 10.15.1.50. The browser would send the HTTPS request with the Host header set to app1.wya.pl or app2.wya.pl. The server would respond with the content for the requested hostname.
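To make the role of the Host header concrete, here is a small sketch that connects to an IP directly and asks for a specific hostname, skipping DNS entirely. The function name and defaults are my own. It disables certificate checks because the certificate will not match a bare IP, so only point it at in-scope hosts. It also doesn't set the TLS SNI value, which some servers may use for routing as well.

```python
import http.client
import ssl


def fetch_with_host(ip, hostname, port=443, use_tls=True, timeout=10.0):
    """Connect to `ip` but request `hostname` via the Host header."""
    if use_tls:
        ctx = ssl.create_default_context()
        # Connecting by IP means the certificate name cannot match.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
        conn = http.client.HTTPSConnection(ip, port, timeout=timeout, context=ctx)
    else:
        conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        # Supplying Host ourselves stops http.client from adding its own.
        conn.request("GET", "/", headers={"Host": hostname})
        resp = conn.getresponse()
        return resp.status, dict(resp.getheaders()), resp.read()
    finally:
        conn.close()
```

Calling `fetch_with_host("10.15.1.50", "app1.wya.pl")` and then again with `"app2.wya.pl"` would hit the same IP both times, and any difference in the responses comes purely from the Host header.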

Cool, so why is this a big deal? Why do pentesters miss this?

The answer is always DNS.

In large organizations there are many teams that come together to architect a network and deploy applications. Rarely does any individual own the whole process required to get a domain name and certificate, manage the deployment server, deploy the code, and serve the content.

Oftentimes a wildcard certificate is deployed to a server to allow for a dynamic set of applications. The server owner doesn’t need to reconfigure the server every time a new app is created or an old one is removed. The server attempts to route each HTTP request to the application matching the requested hostname. If it can’t find a match, it returns the default route.

Most of the time, network admins choose not to publish DNS records for internal applications on their external DNS servers. Internal apps can still resolve the servers they need to talk to. In most cases, an externally facing server can still communicate with internal services.

Real World Example

So far I’ve done a lot of talking about what virtual hosts are. My hope is that a real example will show why this is important to do and why it can pay off. For this example, I’ll demonstrate virtual host enumeration on Ford, which has a VDP listed here: https://hackerone.com/ford. I’ll note that this is not a vulnerability in itself, but a technique that can be used to find additional hosts that may not yet have been tested.

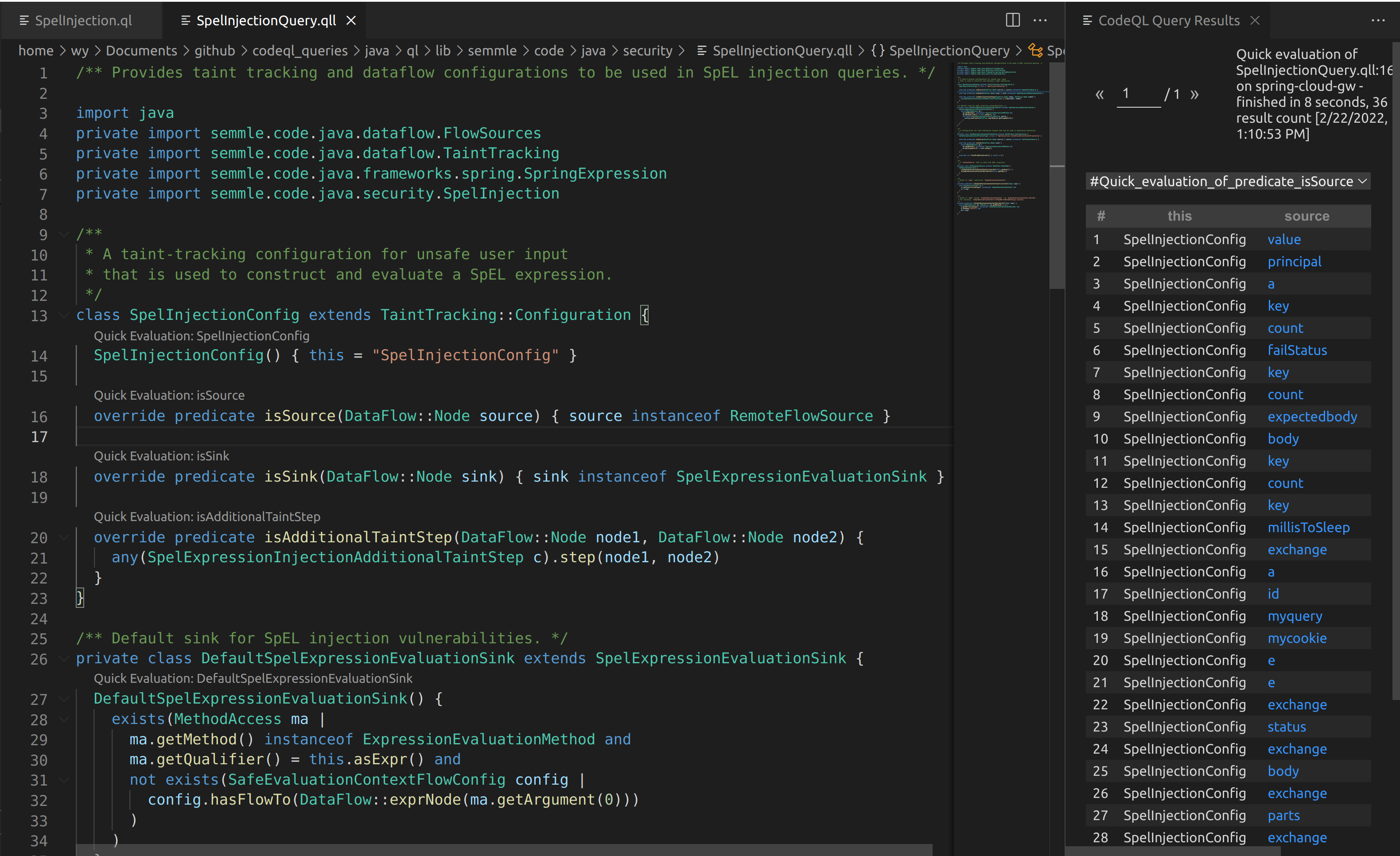

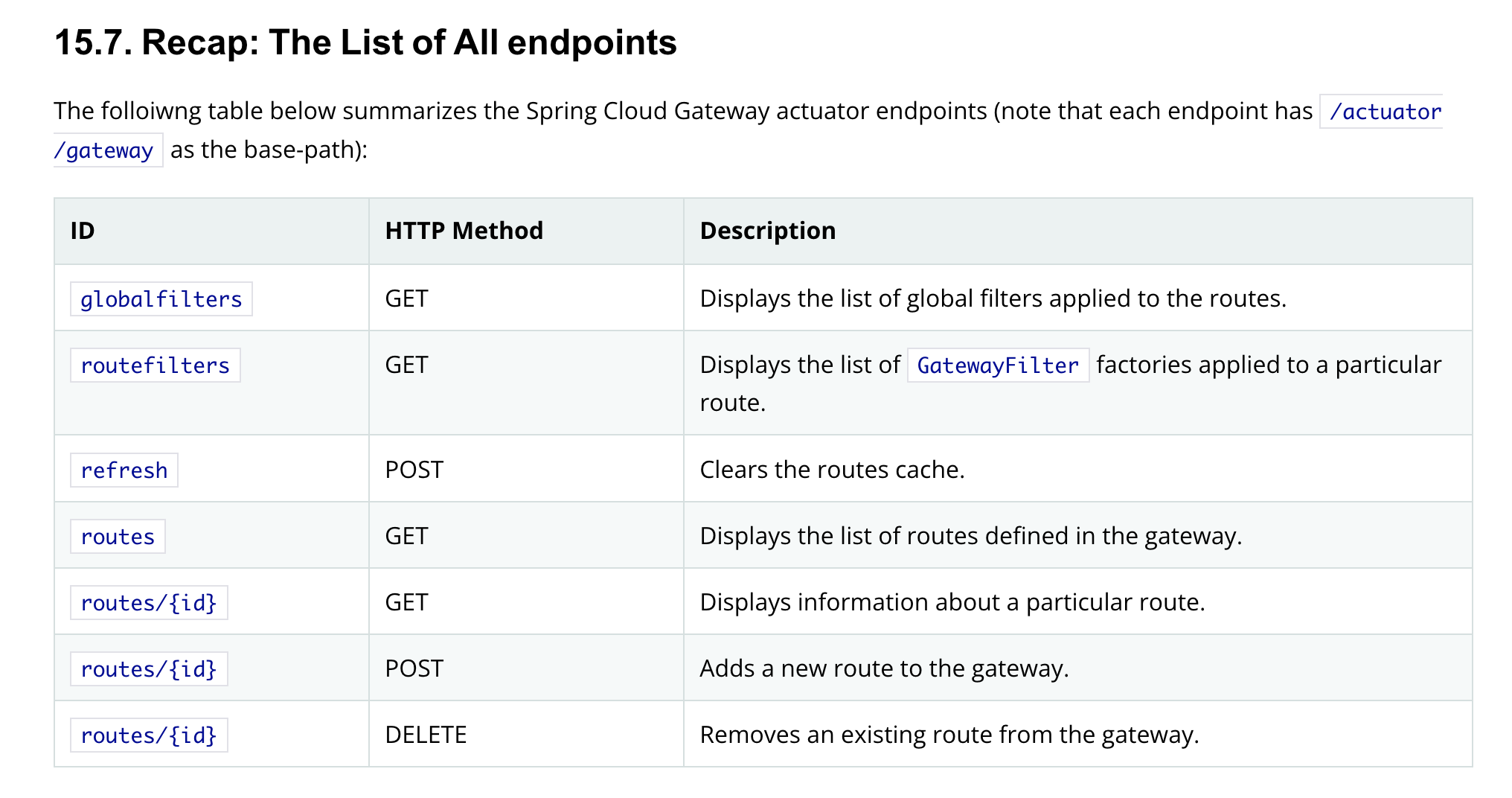

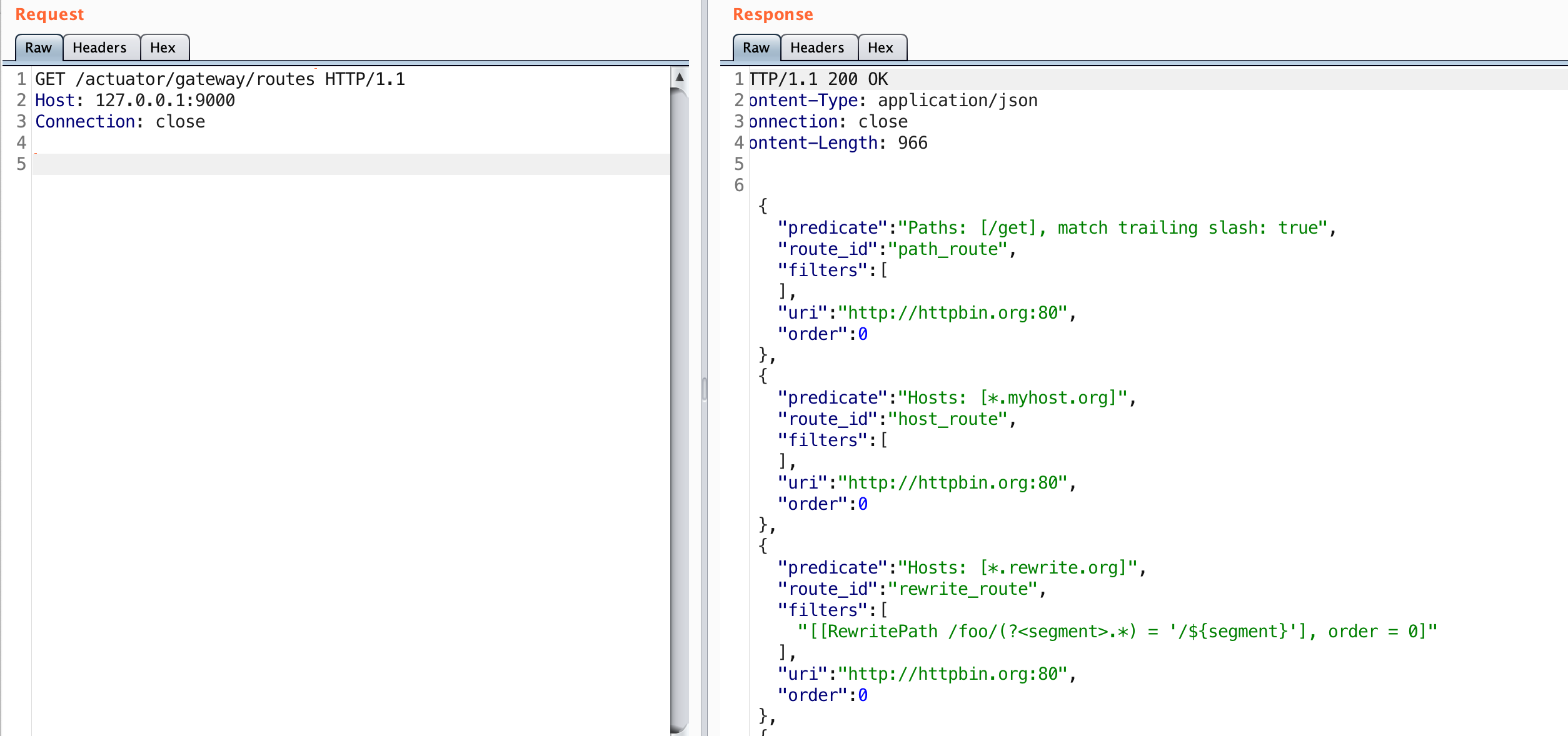

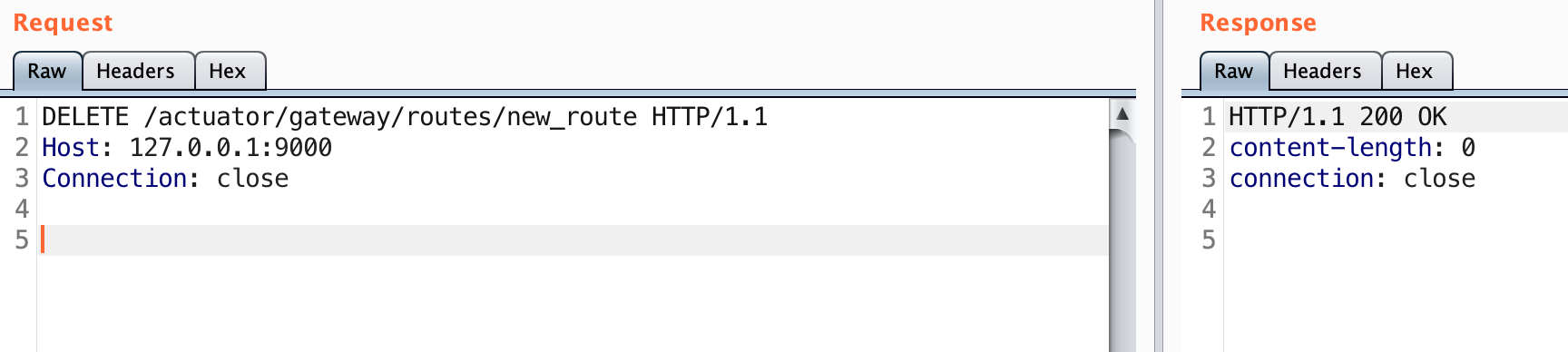

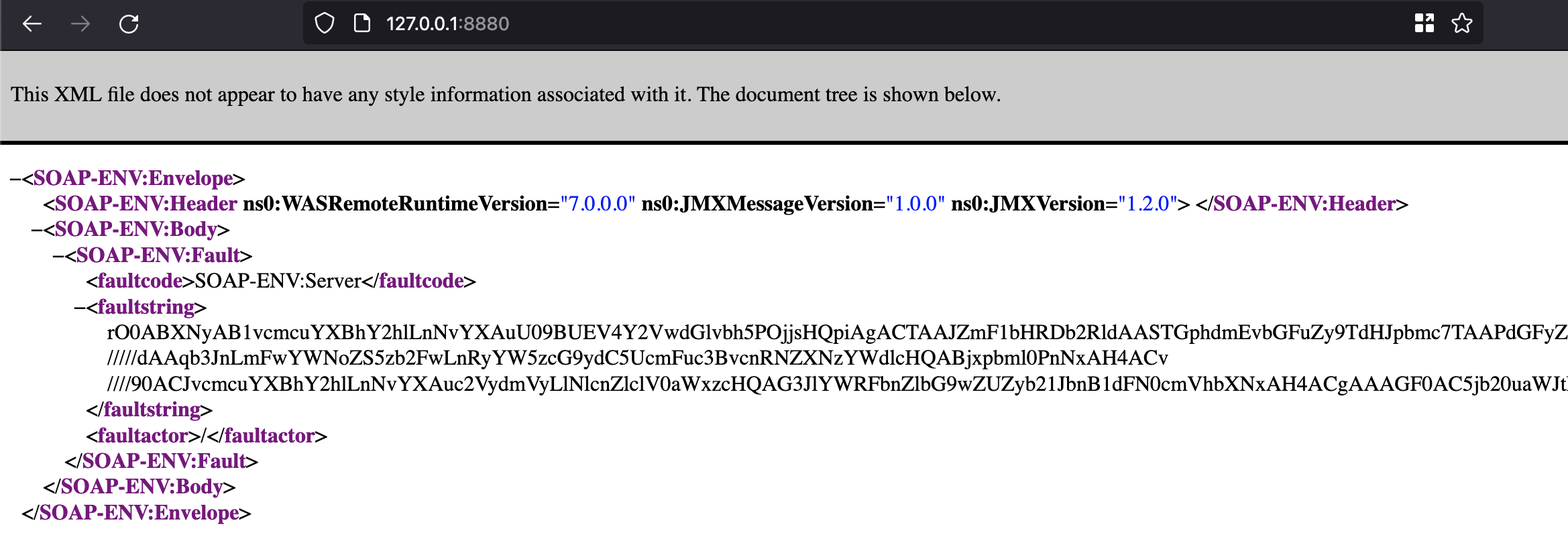

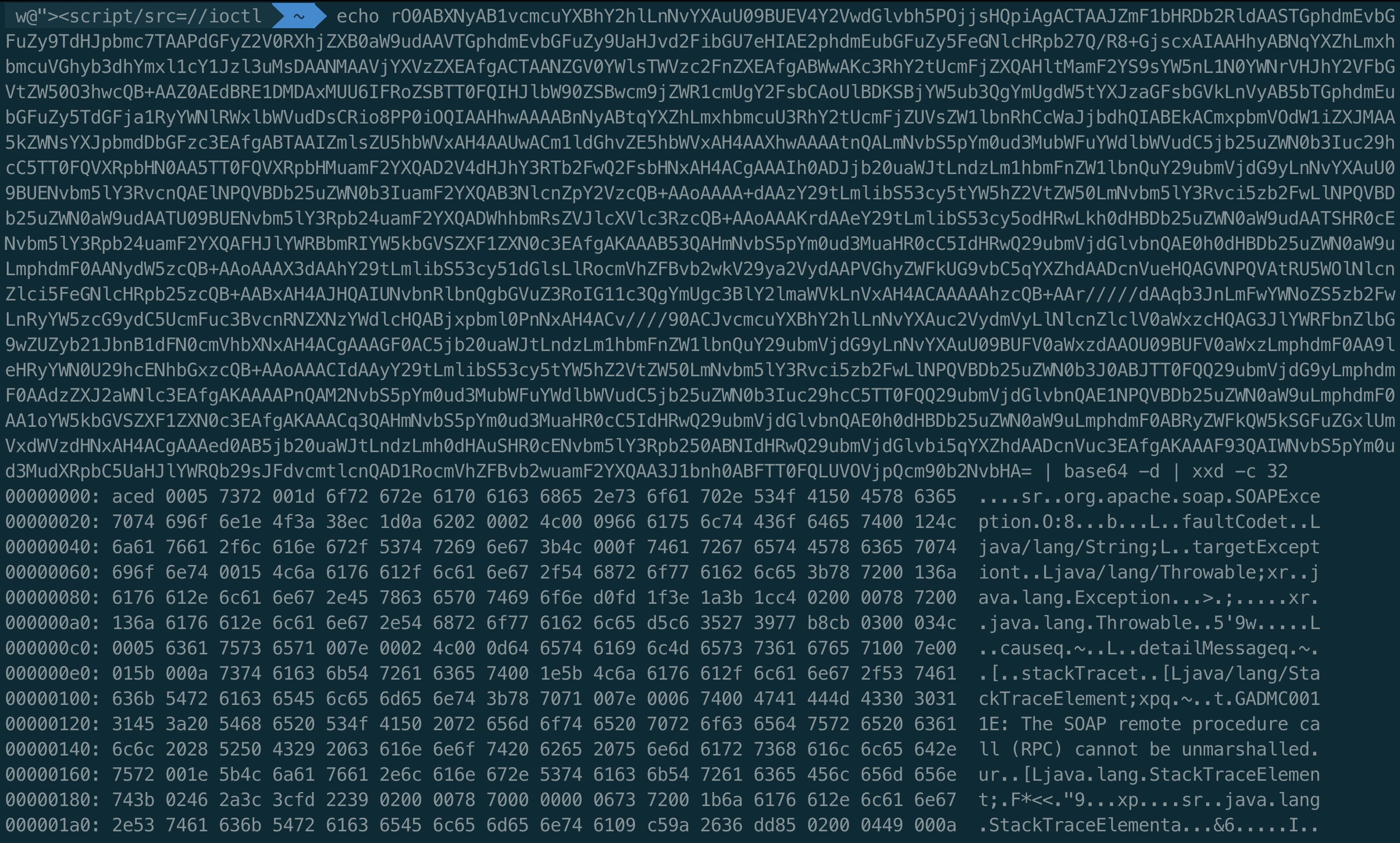

Pivotal Cloud Foundry (PCF) (now part of Tanzu) is a common way to deploy apps at large organizations. The route handler used by PCF is the gorouter. PCF can be a high reward target for virtual host enumeration because of the way gorouter works.

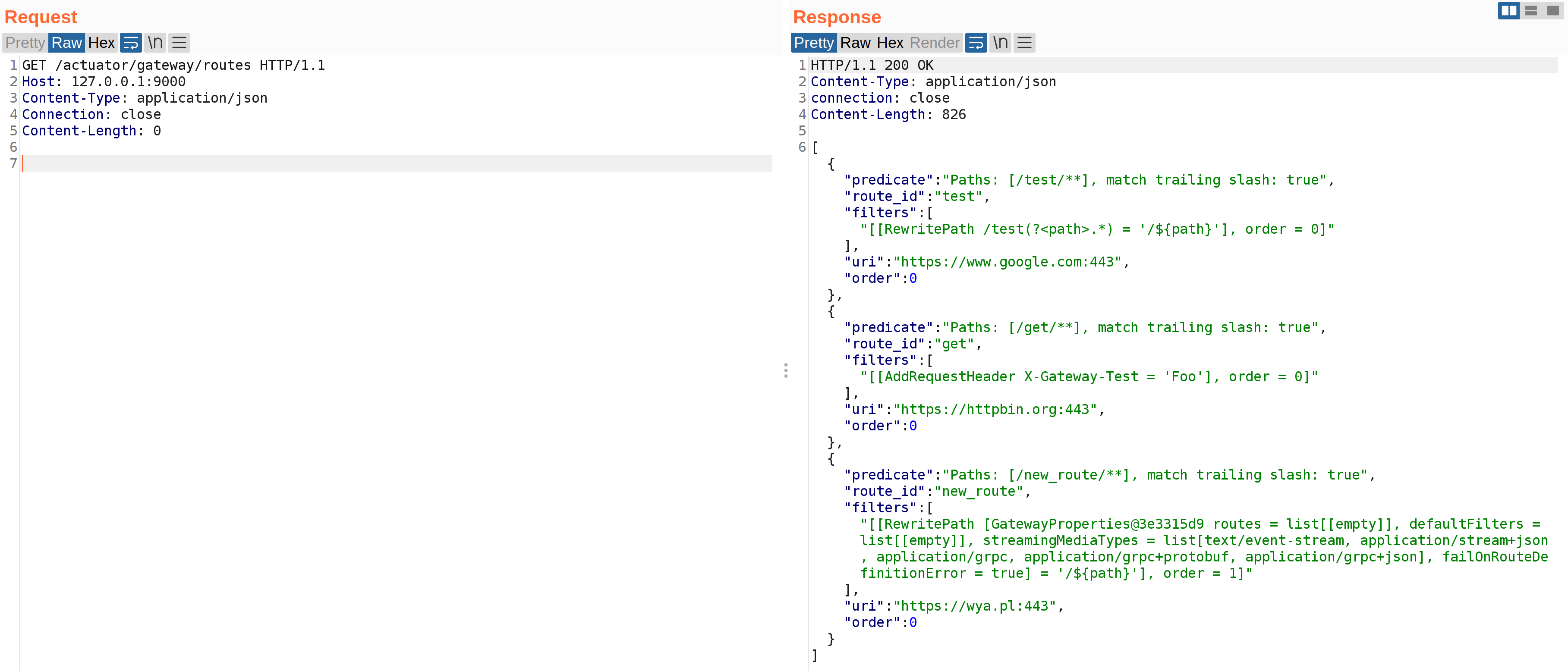

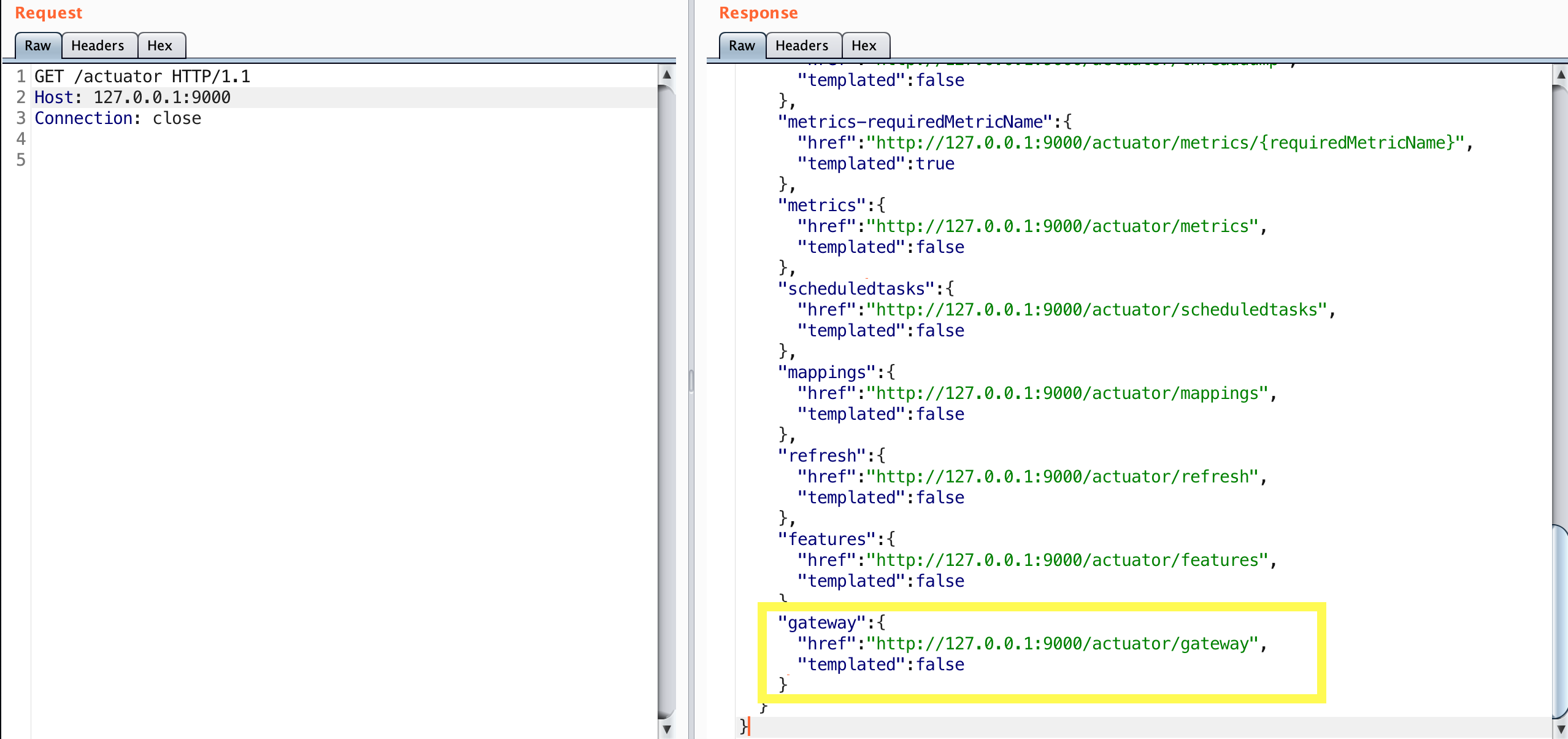

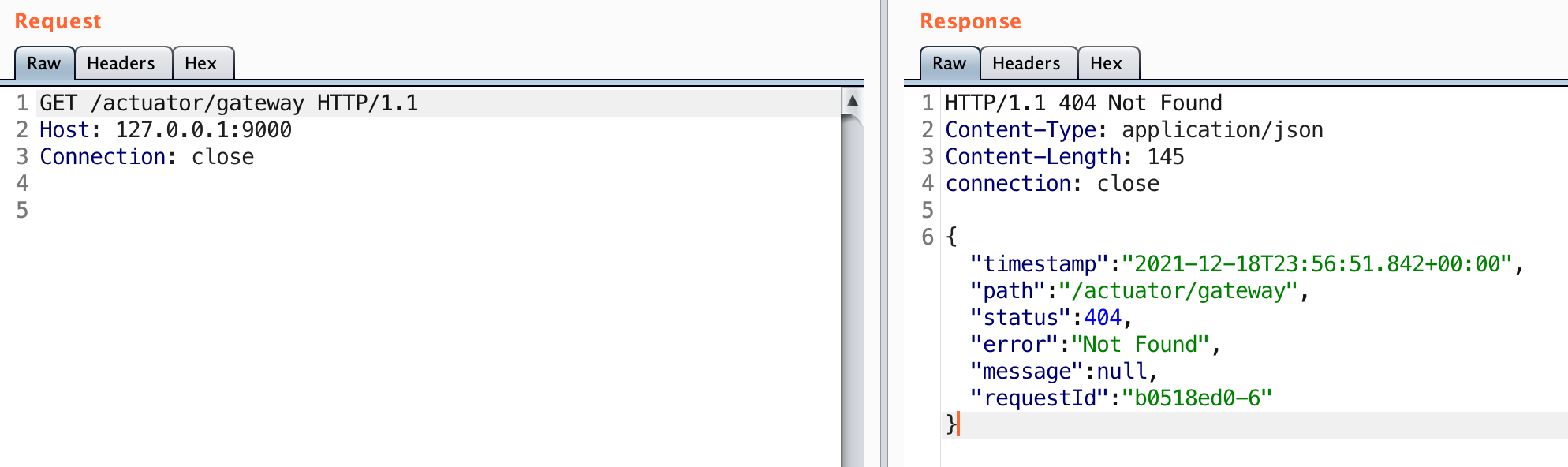

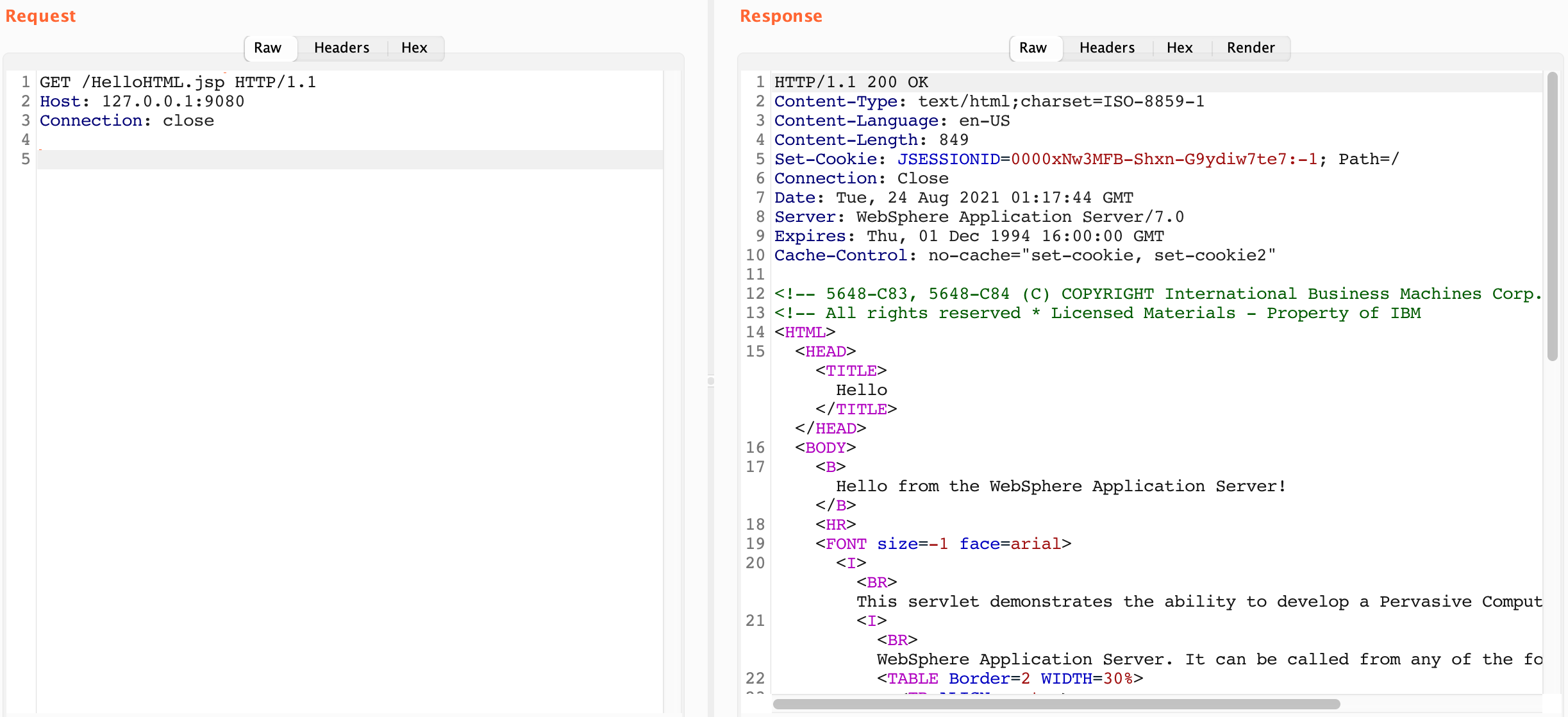

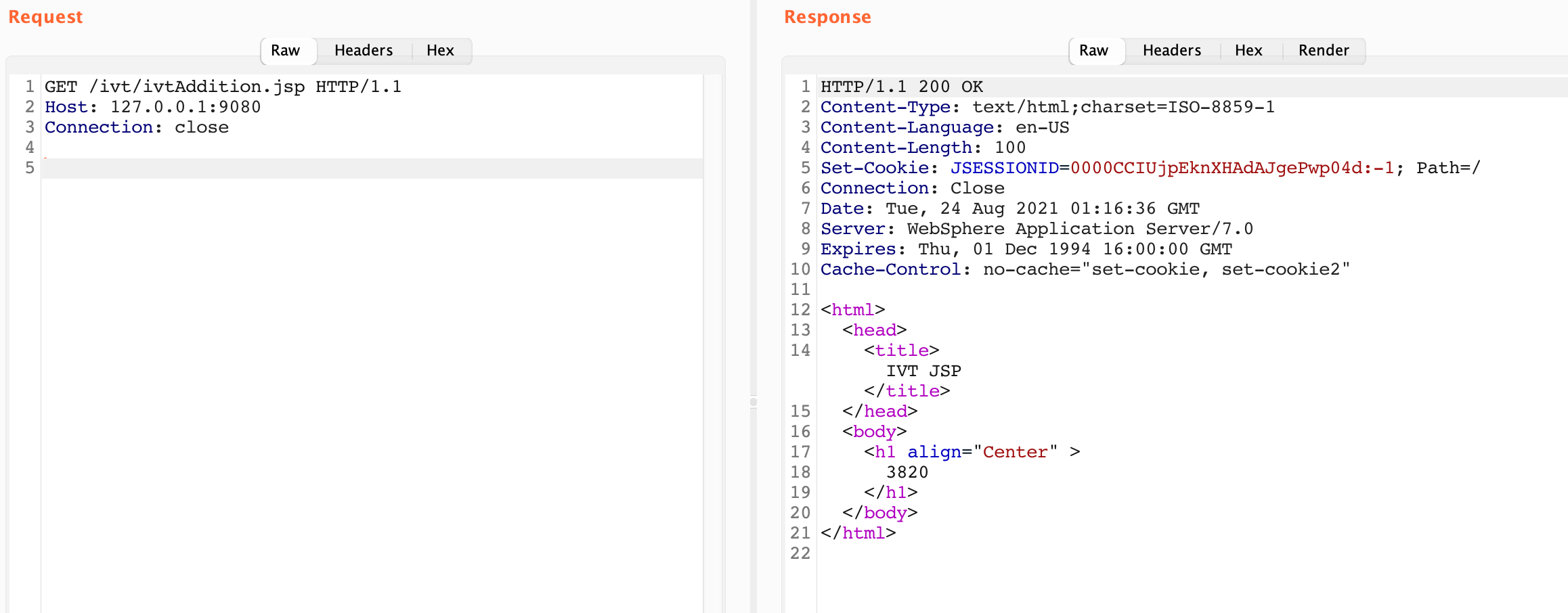

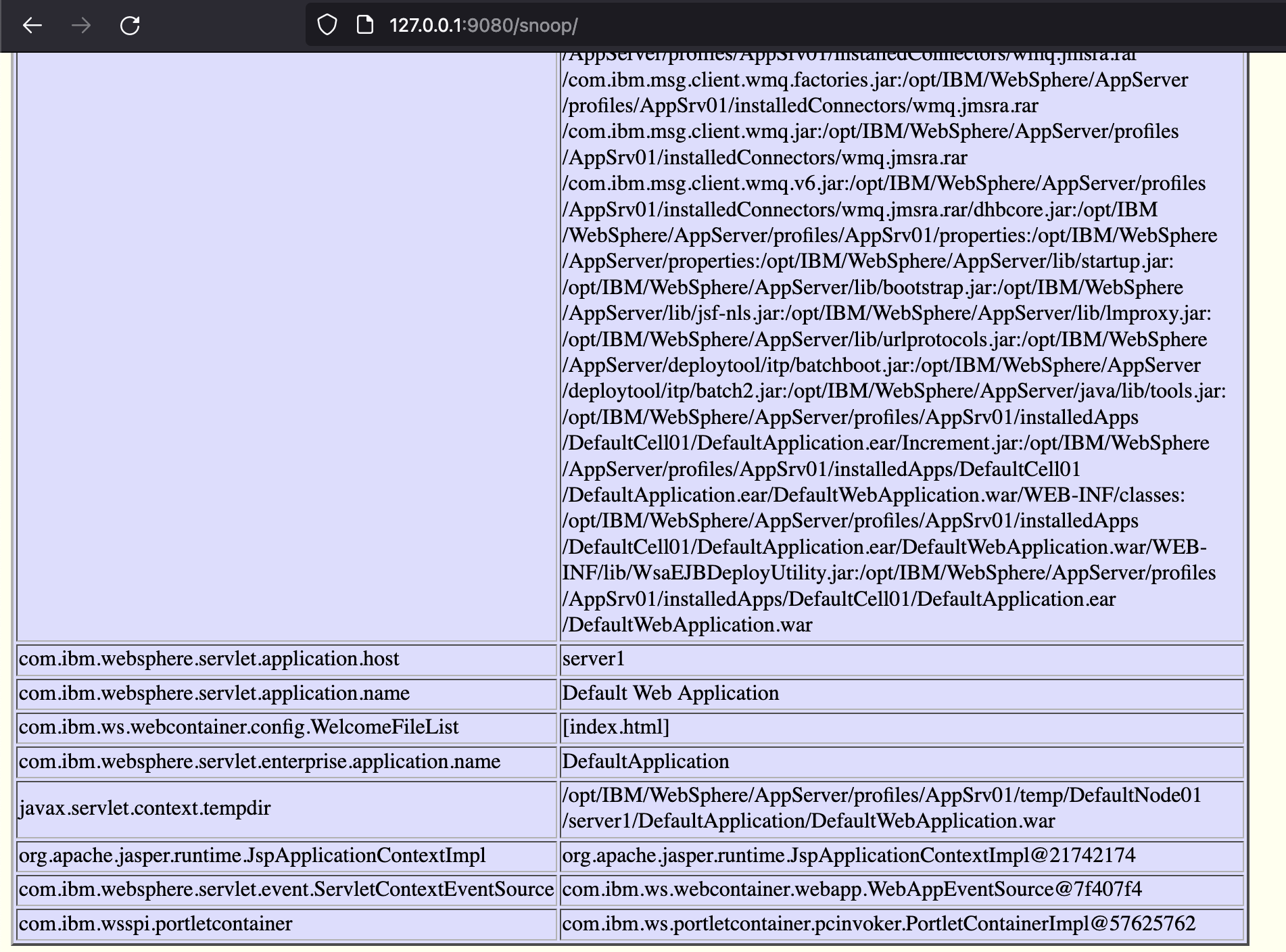

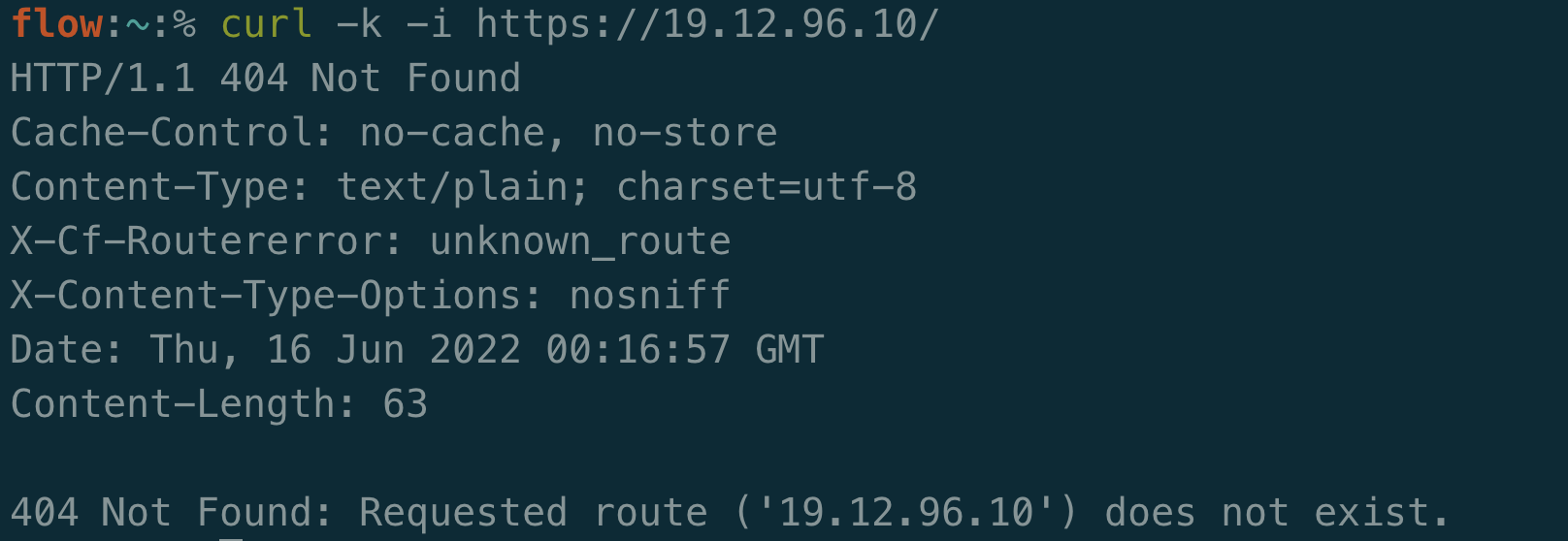

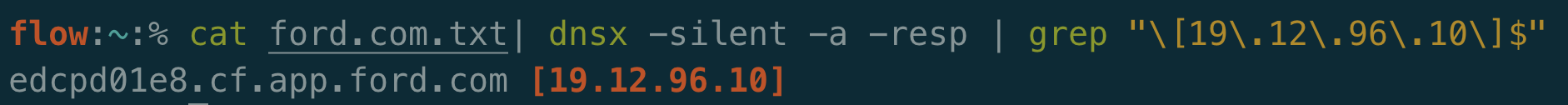

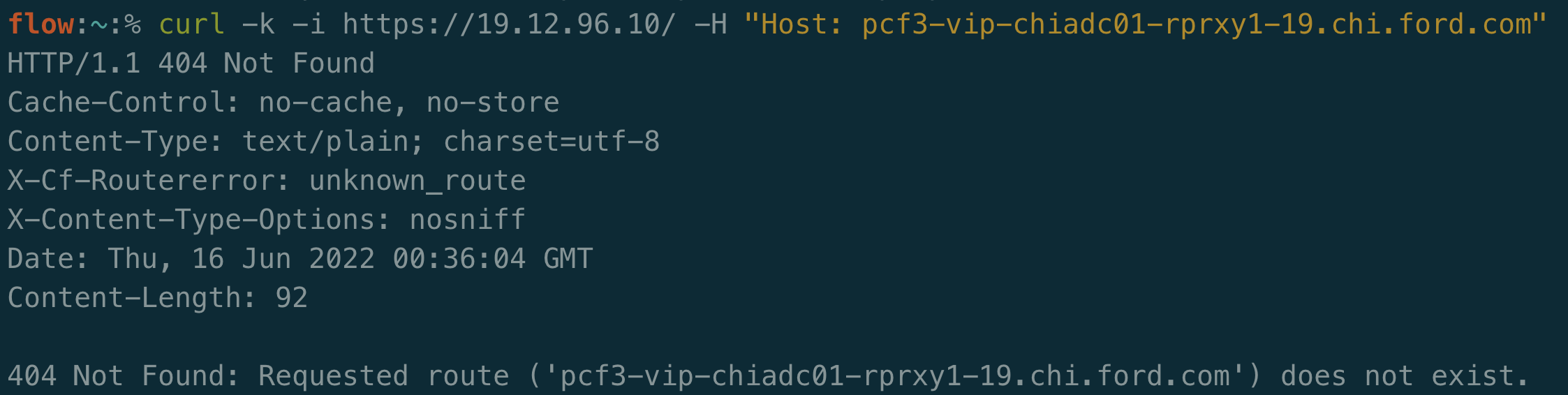

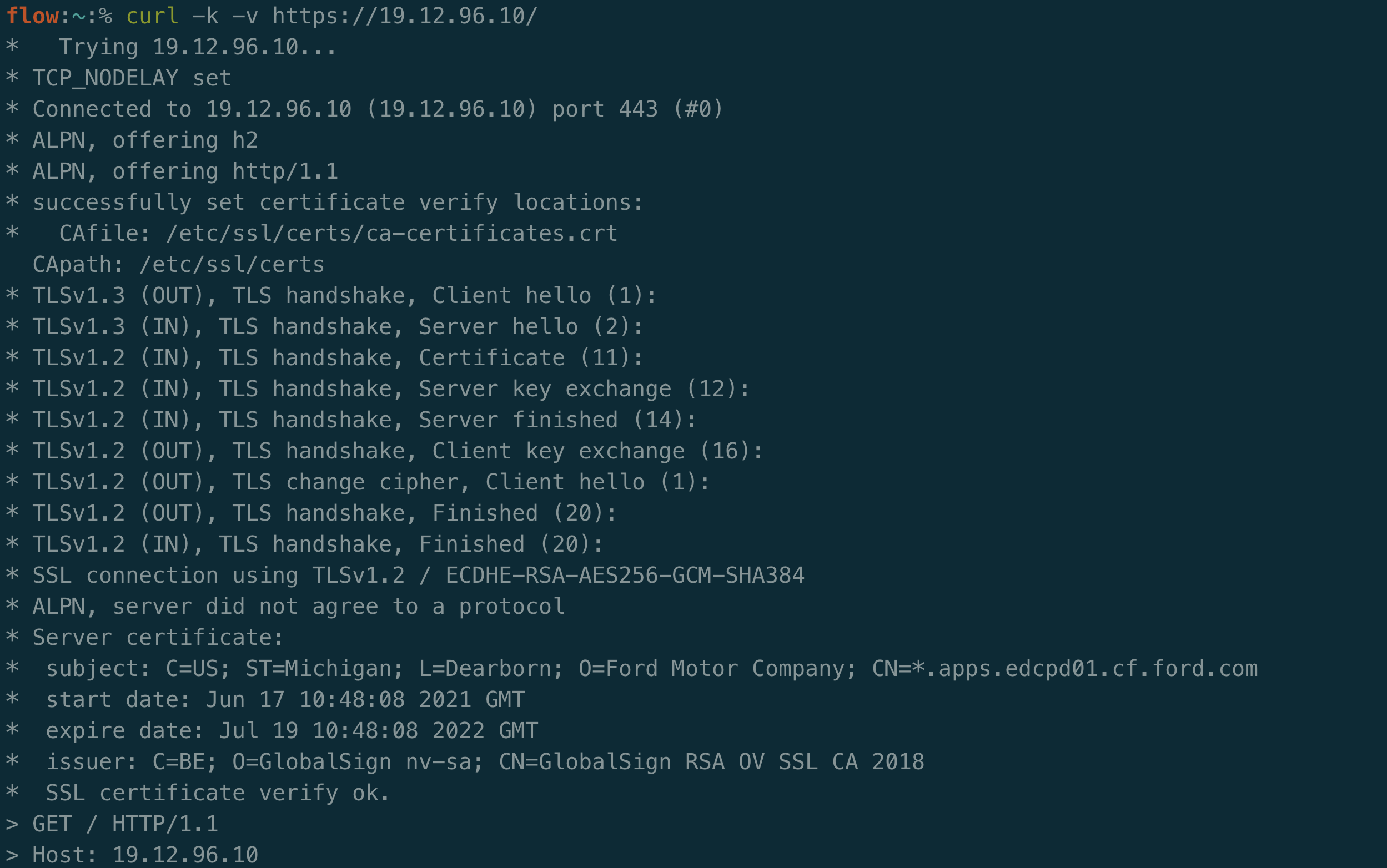

Within Ford’s ASN exists the IP 19.12.96.10. If you had sent an HTTPS request to this IP, you’d see the following:

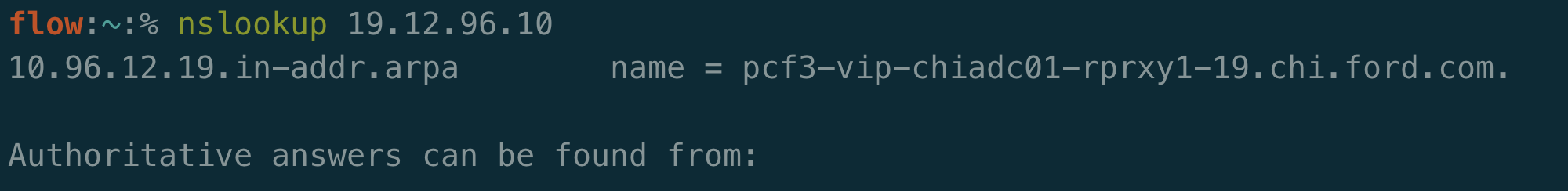

PCF has several clear indicators, such as the “404 Not Found: Requested route (‘…’) does not exist.” and the X-Cf-Routererror header. If you happen to do an nslookup on this IP you can see that it is indeed associated with Ford:

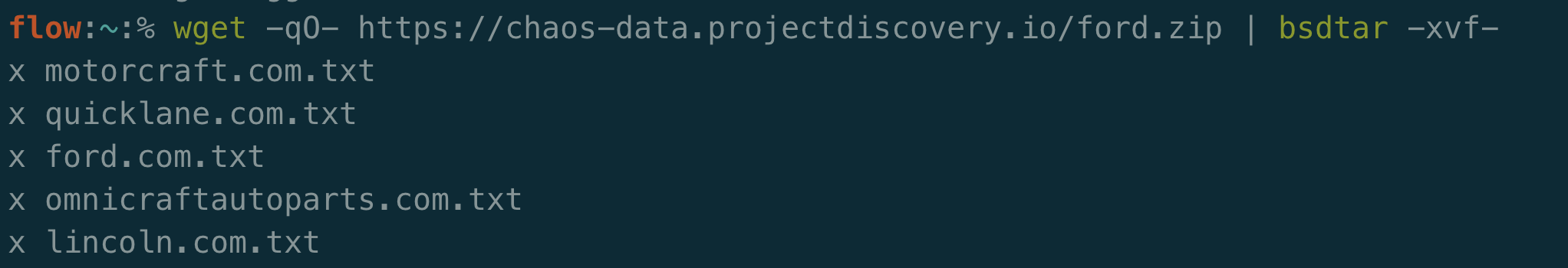
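The two gorouter indicators mentioned above (the X-Cf-Routererror header and the "Requested route ... does not exist" 404 body) are easy to check for when triaging a large range. The helper below is a rough sketch with a name of my choosing. It only looks for those two signals and will miss PCF deployments that mask them.

```python
def looks_like_gorouter(status, headers, body):
    """Heuristically flag a response as coming from PCF's gorouter.

    `headers` is a mapping of header names to values, `body` is text.
    """
    lowered = {name.lower() for name in headers}
    # Indicator 1: the router error header.
    if "x-cf-routererror" in lowered:
        return True
    # Indicator 2: the default 404 body for an unknown route.
    return (
        status == 404
        and "Requested route" in body
        and "does not exist" in body
    )
```

Running something like this across every web server in scope gives a short list of IPs that are likely to be worth the extra effort of virtual host fuzzing.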

Let’s say you have a list of domains related to this company. In an internal pentest, you may have access to this data, or you can scrape a source control service like GitHub for domains. The Chaos dataset from ProjectDiscovery is a great starting point for public programs.

I’ll go ahead and throw all ford.com domains through dnsx to see what resolves to this IP we are testing.

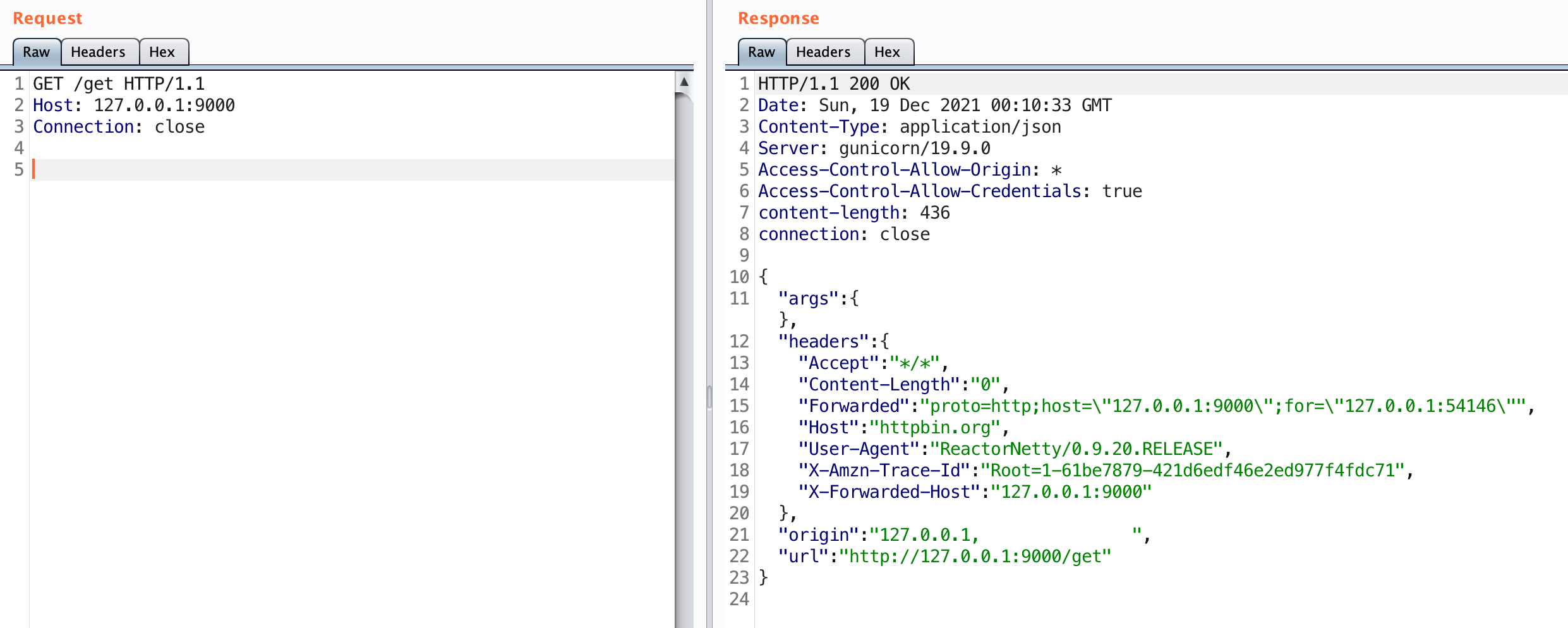

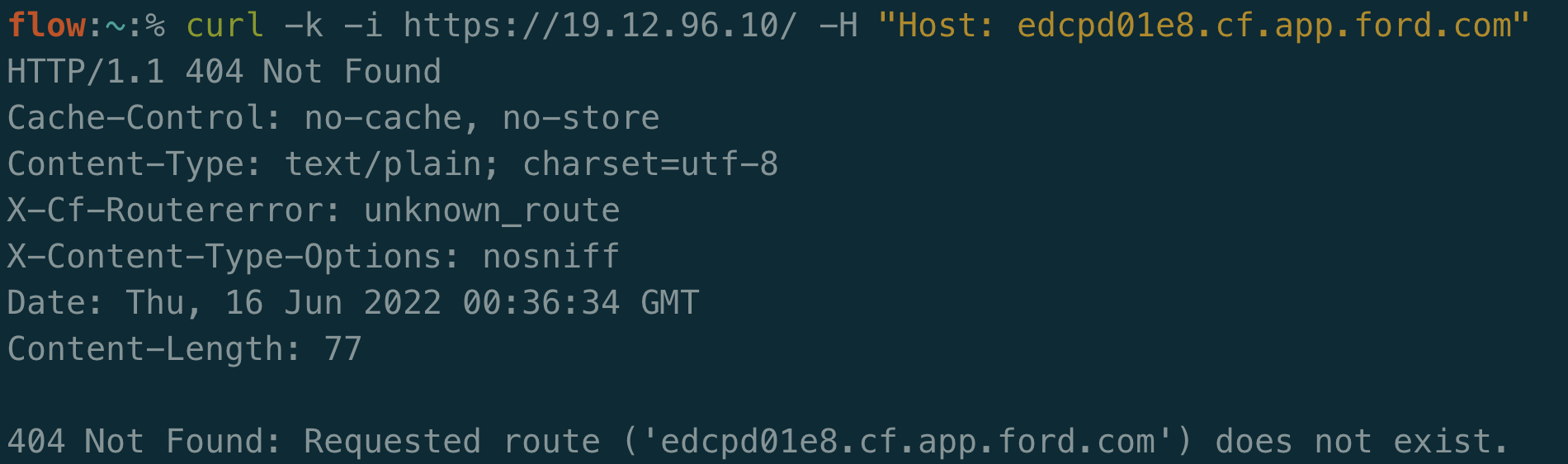

Only a single result! Isn’t that a bit weird? We saw earlier that an nslookup resolved pcf3-vip-chiadc01-rprxy1-19.chi.ford.com to that IP as well. Let’s see what results we get if we send an HTTPS request to that IP with each hostname:

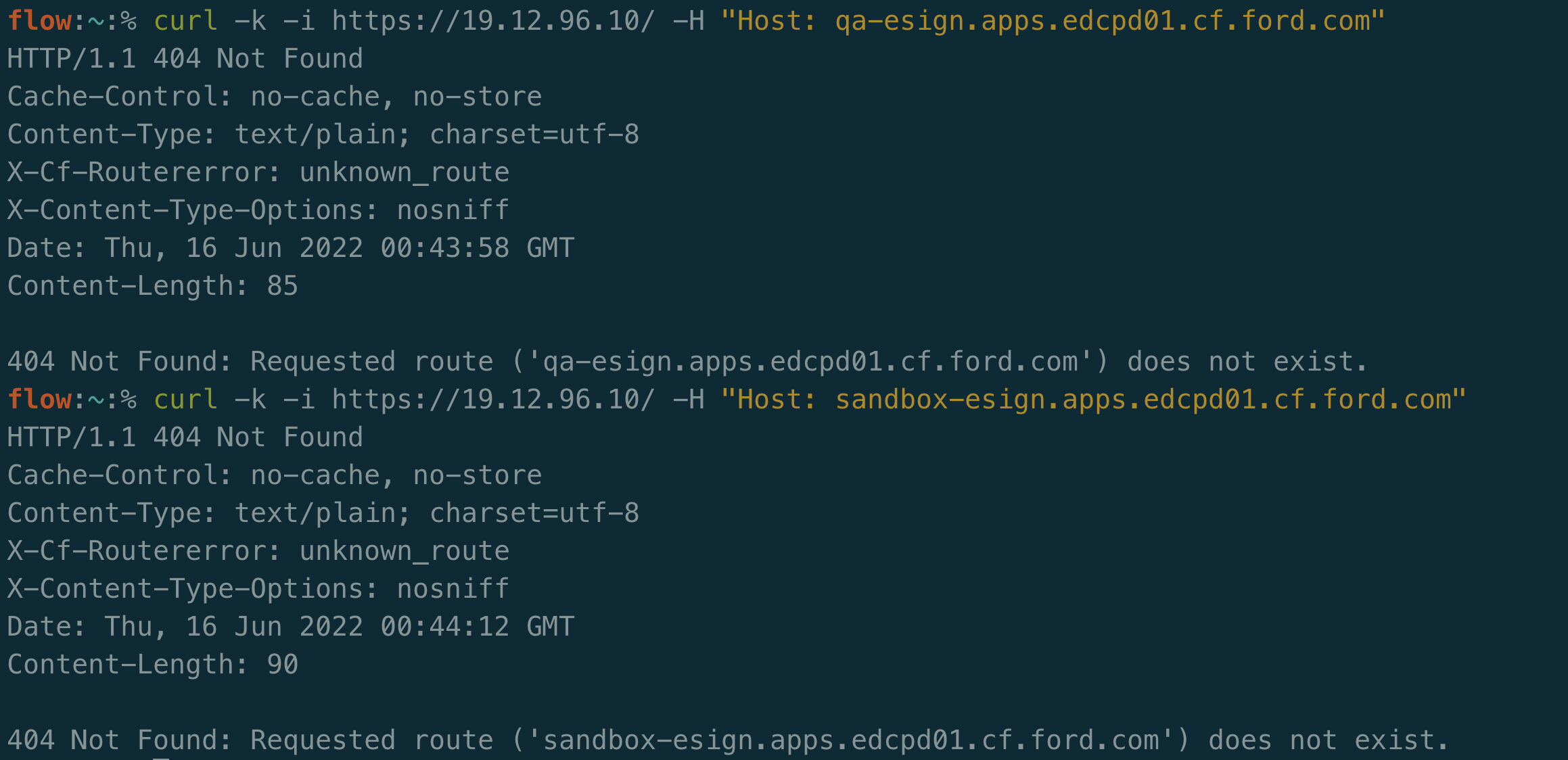

Nothing on either domain! Bummer.

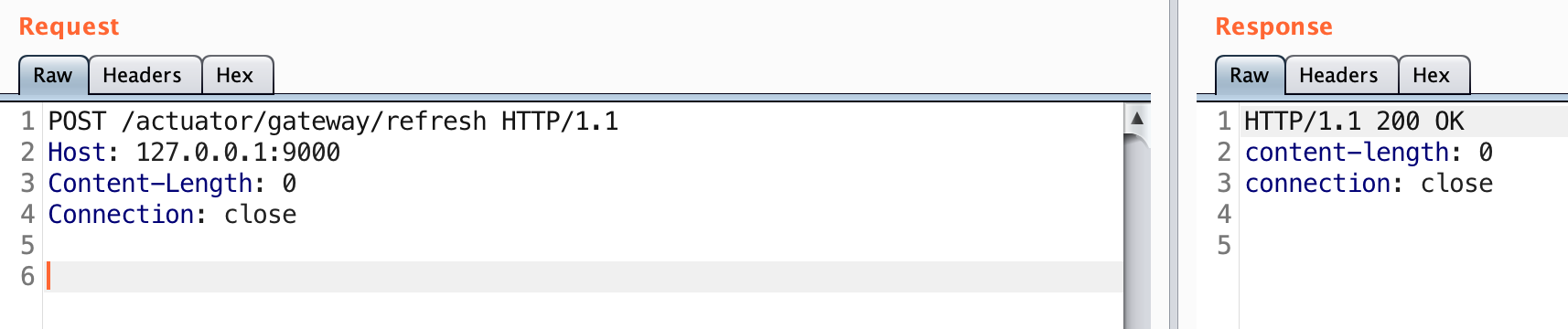

Unfortunately our DNS results were a bust. Maybe certificate scraping will work. If I switch to the -v flag in curl for verbosity, I can see the certificates (I’ll note there are plenty of tools to automatically do this).

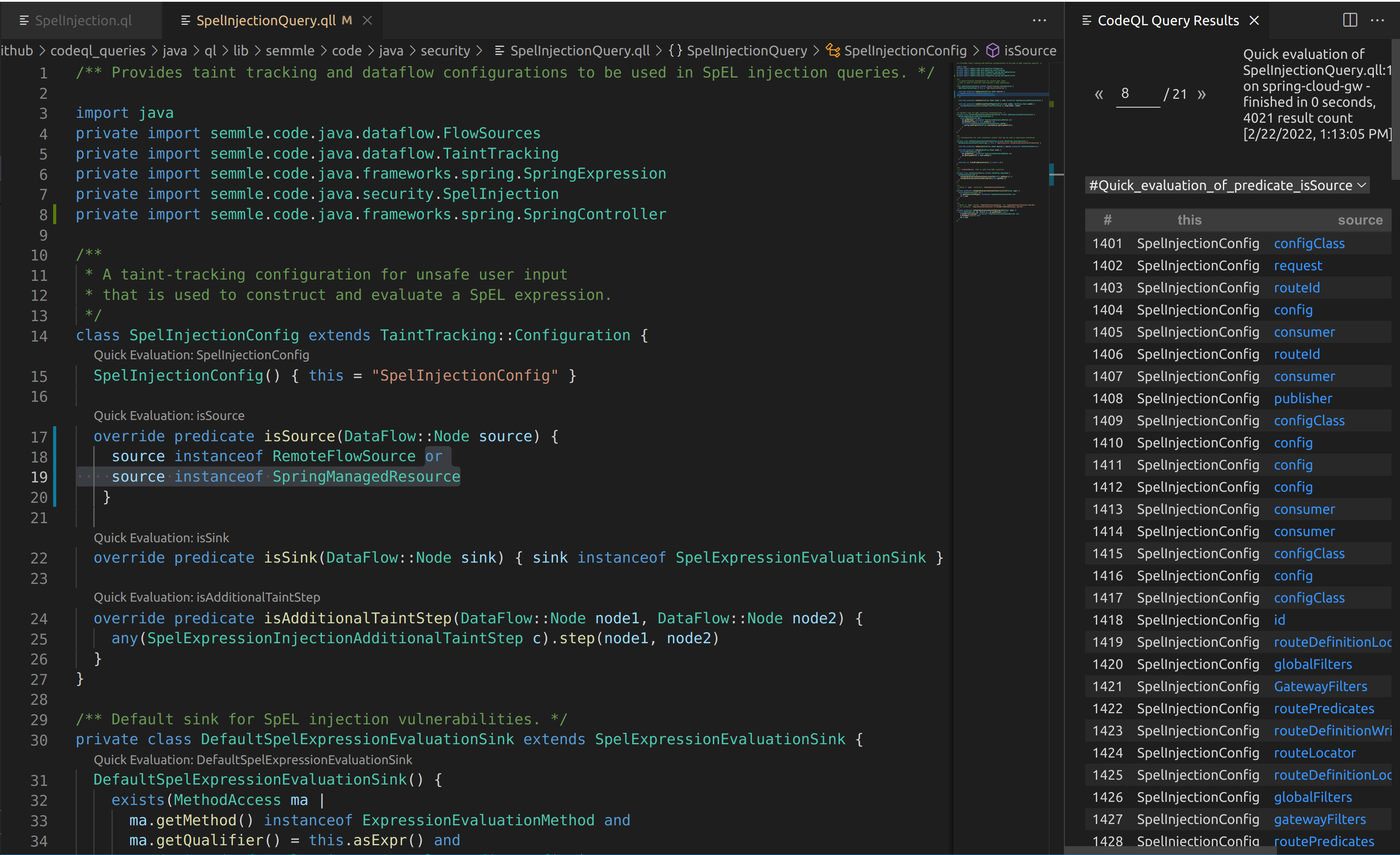

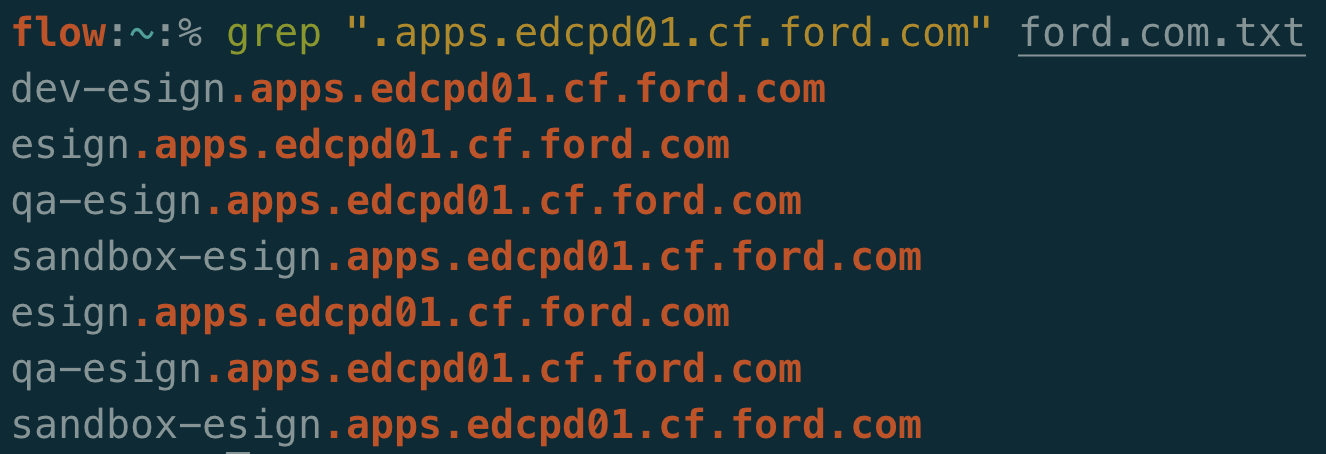

In the subject line, the CN of *.apps.edcpd01.cf.ford.com can be seen. A wildcard is interesting. Let’s see if any domains with that suffix exist in the Ford dataset from ProjectDiscovery.

Unfortunately, running through the same exercise doesn’t give us any different results.

At this point, I’ve seen a lot of pentesters move on.

Let’s have some creativity here. We weren’t able to find any additional websites through DNS or certificate scraping. What else can we try?

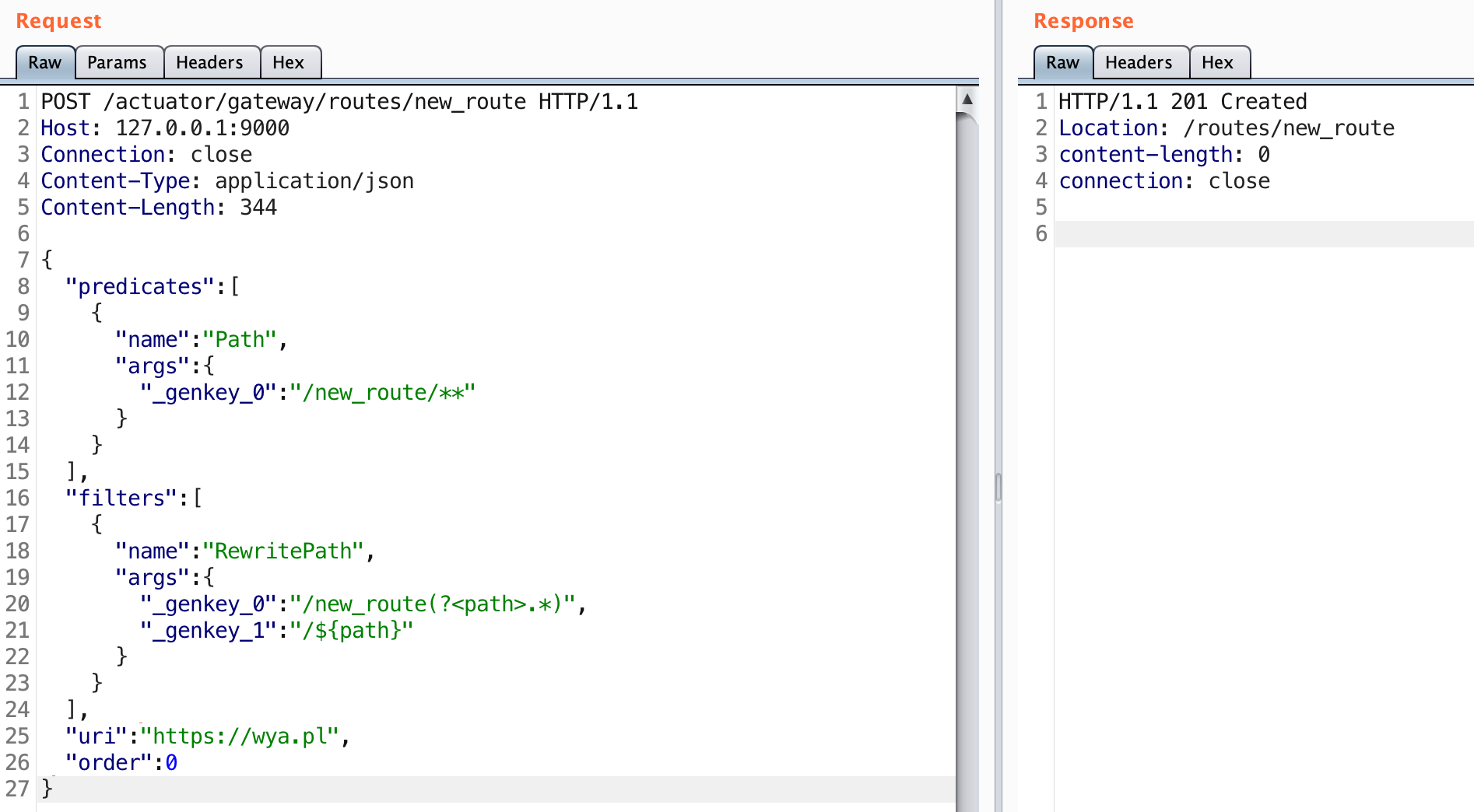

We have a large list of domain names related to the company. That could be a good start. Another trick could be to mangle the subdomains and test out various permutations. Ripgen is a good example of what this would look like and I encourage you to try it out. SecLists also has a nice set of subdomain wordlists that you could prefix to the target company’s domain (or even the wildcard suffix).
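Prefixing a wordlist onto the company domain or a wildcard suffix is simple to script. The sketch below is the most basic version of that idea, and the function name is mine. Tools like Ripgen go much further with real permutation logic.

```python
def candidate_hostnames(words, suffixes):
    """Build word.suffix guesses, de-duplicated, in a stable order."""
    seen = set()
    guesses = []
    for suffix in suffixes:
        # Accept wildcard names such as "*.apps.example.com" as suffixes.
        suffix = suffix.lstrip("*.").lower()
        for word in words:
            word = word.strip().lower()
            if not word:
                continue
            host = f"{word}.{suffix}"
            if host not in seen:
                seen.add(host)
                guesses.append(host)
    return guesses
```

For example, feeding it a SecLists subdomain wordlist together with both `ford.com` and `*.apps.edcpd01.cf.ford.com` would yield one guess per word for each suffix.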

Once you are ready to give it another go, you can test your list of domains out against the IP to see if there is any significant variation in response. If there is, you may have found a virtual host.

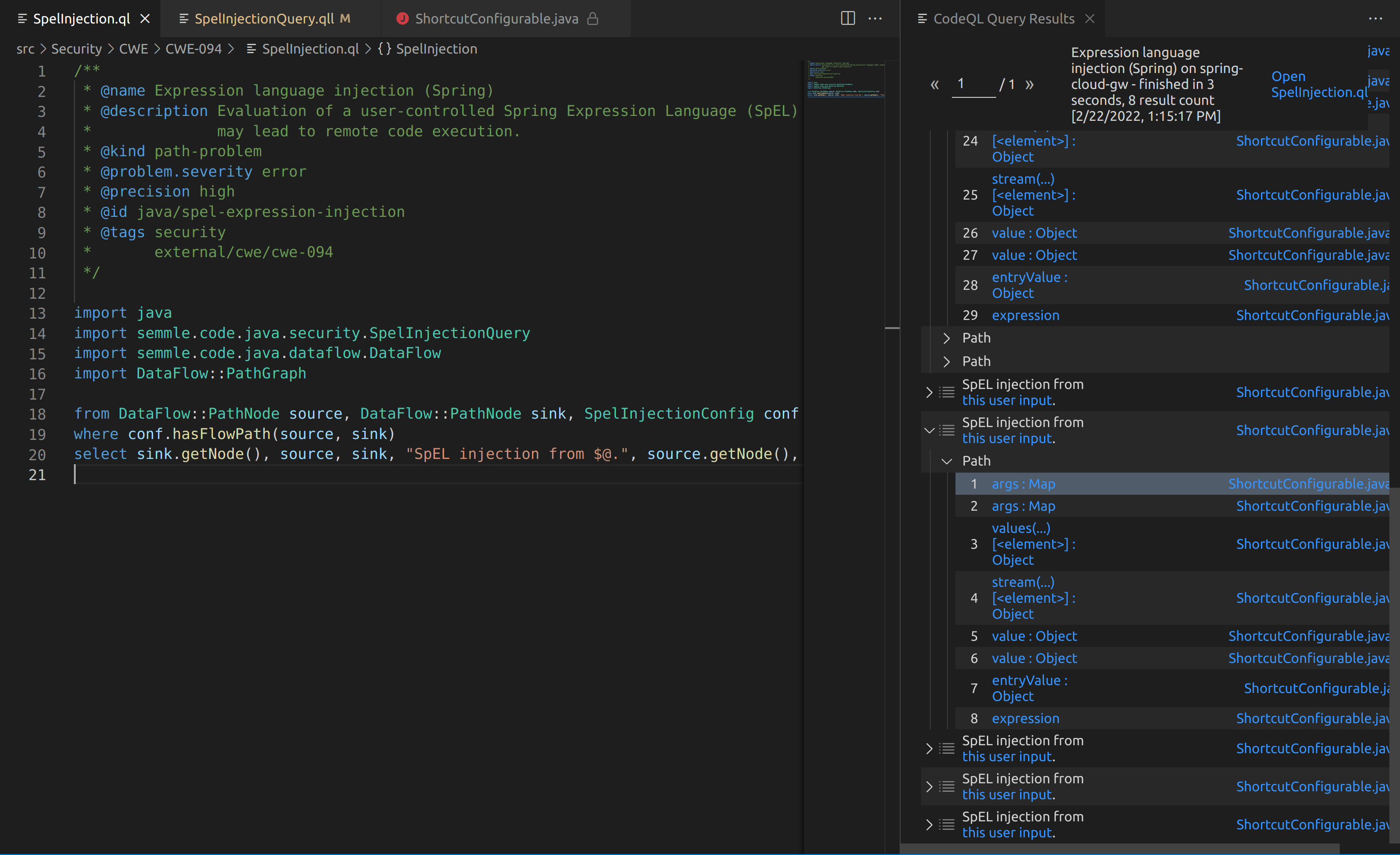

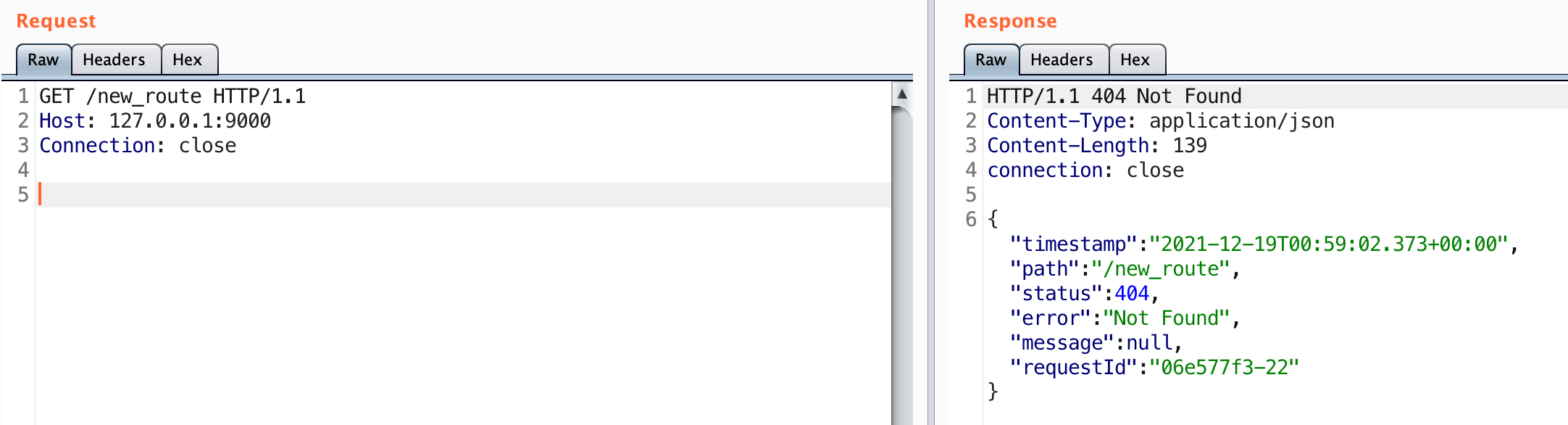

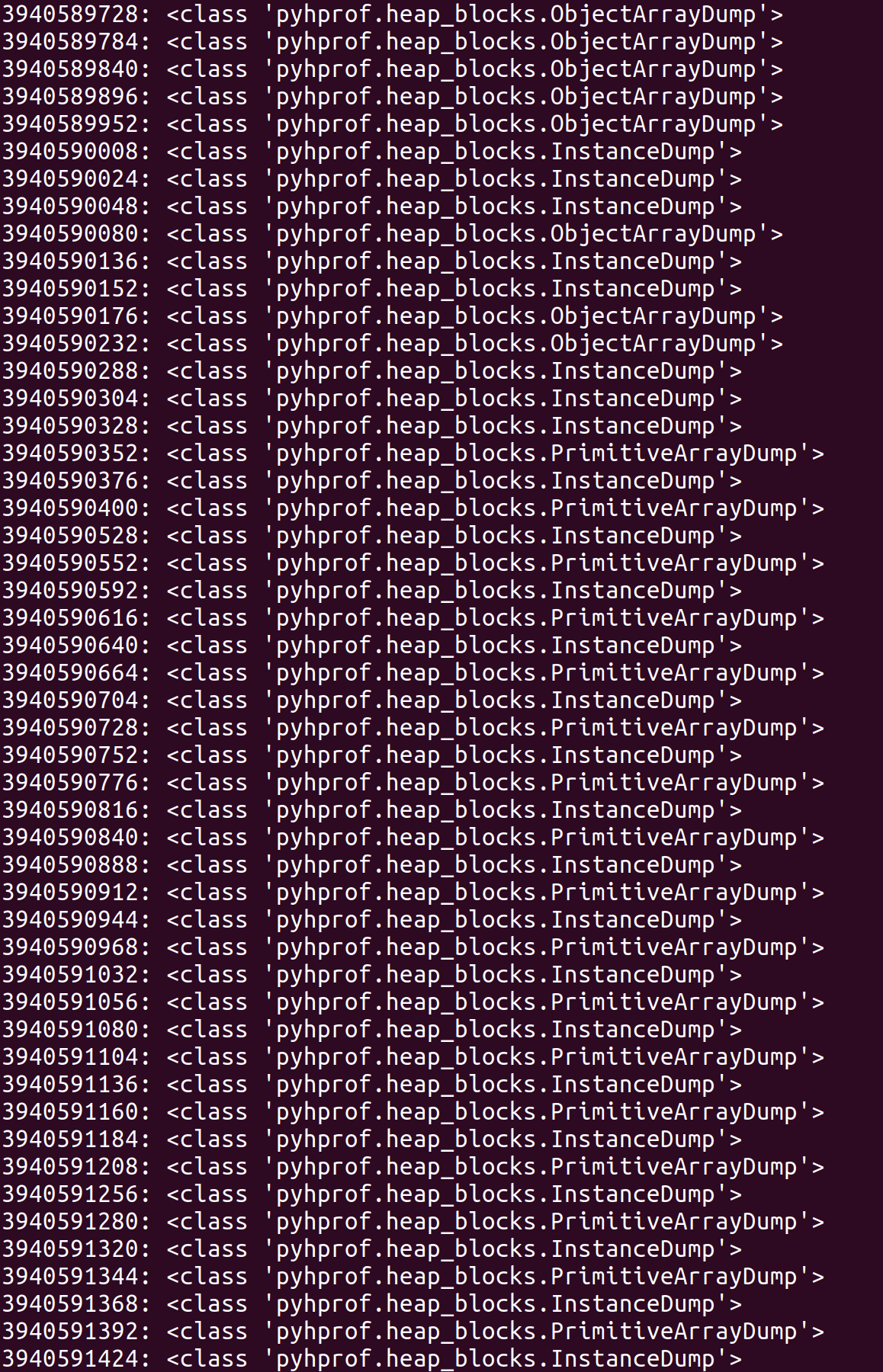

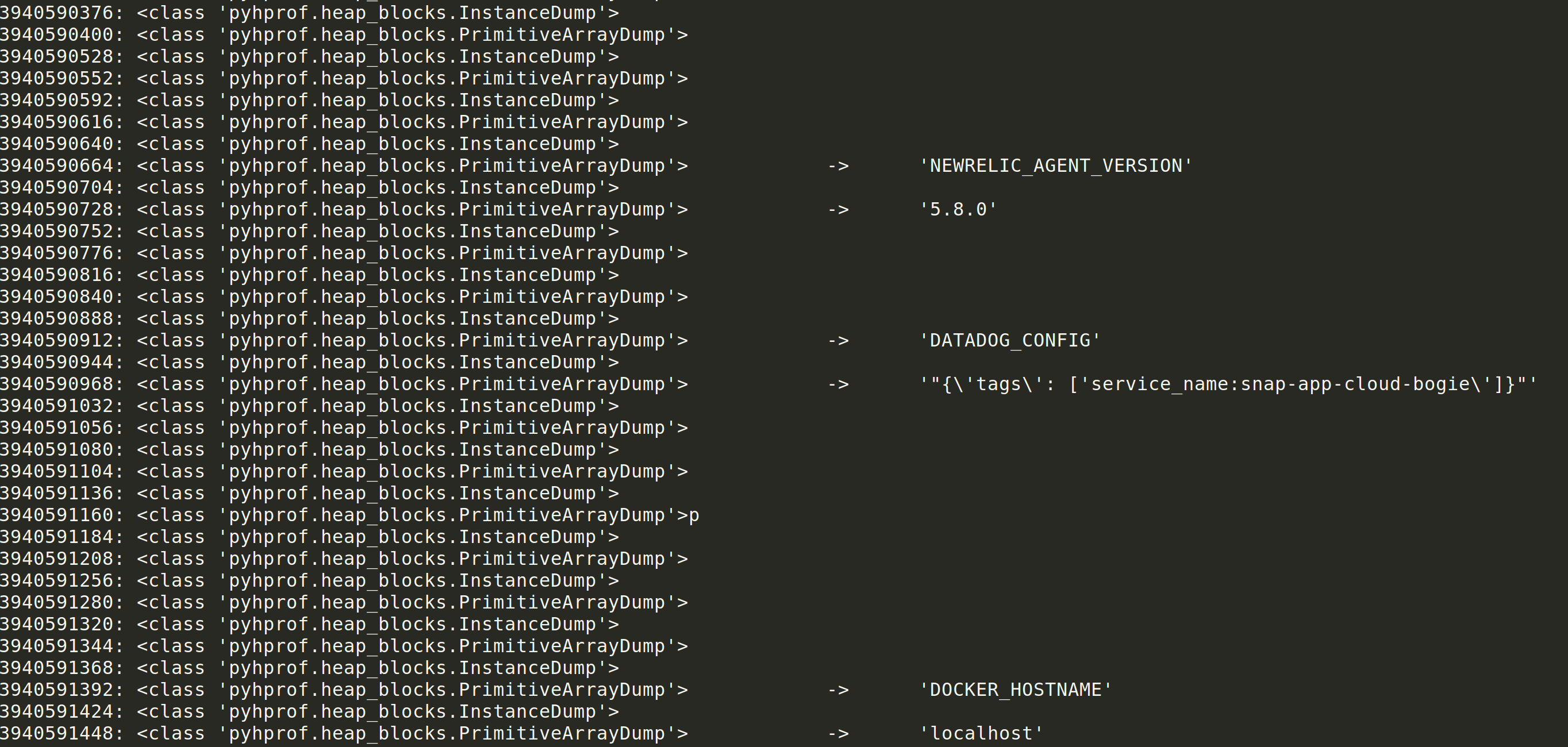

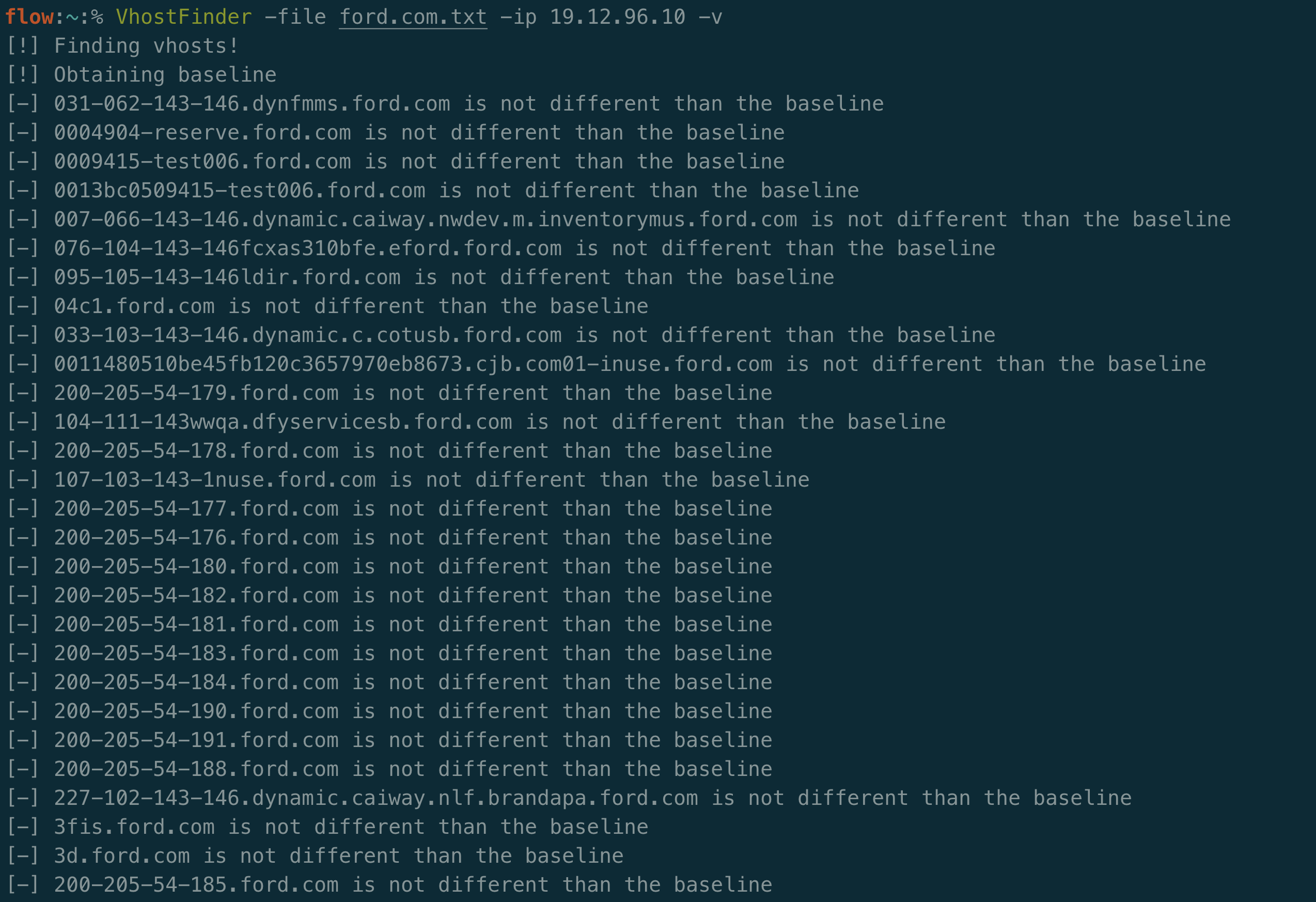

I wrote a tool named VhostFinder, which tests for exactly this. Virtual host fuzzing isn’t a new technique, and there are already good tools that do it, but the public tools didn’t work quite the way I wanted, so I made my own. It starts by requesting a random hostname to determine the default route. It then compares the response for each guess to that baseline. If there is a significant difference, it considers that hostname to be a virtual host. I also added a -verify flag, which checks whether the guessed response differs from the response you get when requesting that domain normally through DNS. This helps ensure the results are only virtual hosts and not something you can already reach publicly.
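The baseline-and-compare idea can be illustrated with a short sketch. To be clear, this is not VhostFinder’s code. The function names, the 0.9 similarity threshold, and the use of `difflib` are all assumptions made for illustration. `fetch` is a hypothetical callable you supply that takes a hostname and returns a `(status, body_text)` pair for a request sent to the target IP with that Host header.

```python
import secrets
from difflib import SequenceMatcher


def random_hostname(suffix):
    """A hostname that should not exist, used to find the default route."""
    return f"{secrets.token_hex(8)}.{suffix}"


def differs_from_baseline(baseline, candidate, threshold=0.9):
    """True if the candidate response is meaningfully unlike the baseline."""
    base_status, base_body = baseline
    cand_status, cand_body = candidate
    if base_status != cand_status:
        return True
    ratio = SequenceMatcher(None, base_body, cand_body, autojunk=False).ratio()
    return ratio < threshold


def find_vhosts(fetch, hostnames, suffix, threshold=0.9):
    """Return the hostnames whose responses differ from the default route."""
    def probe(host):
        status, body = fetch(host)
        # Error pages often echo the requested name; mask it so two
        # "not found" pages for different guesses compare as equal.
        return status, body.replace(host, "<host>")

    baseline = probe(random_hostname(suffix))
    return [
        host for host in hostnames
        if differs_from_baseline(baseline, probe(host), threshold)
    ]
```

Comparing whole bodies this way is slow on large pages, so a real tool would likely compare cheaper signals first, such as status code, length, and headers.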

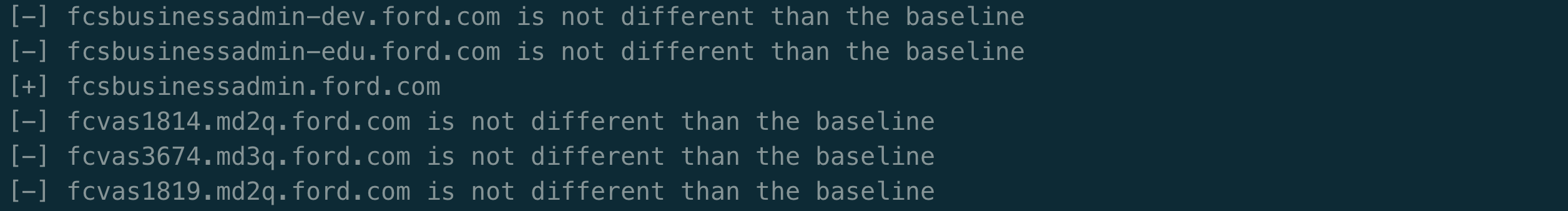

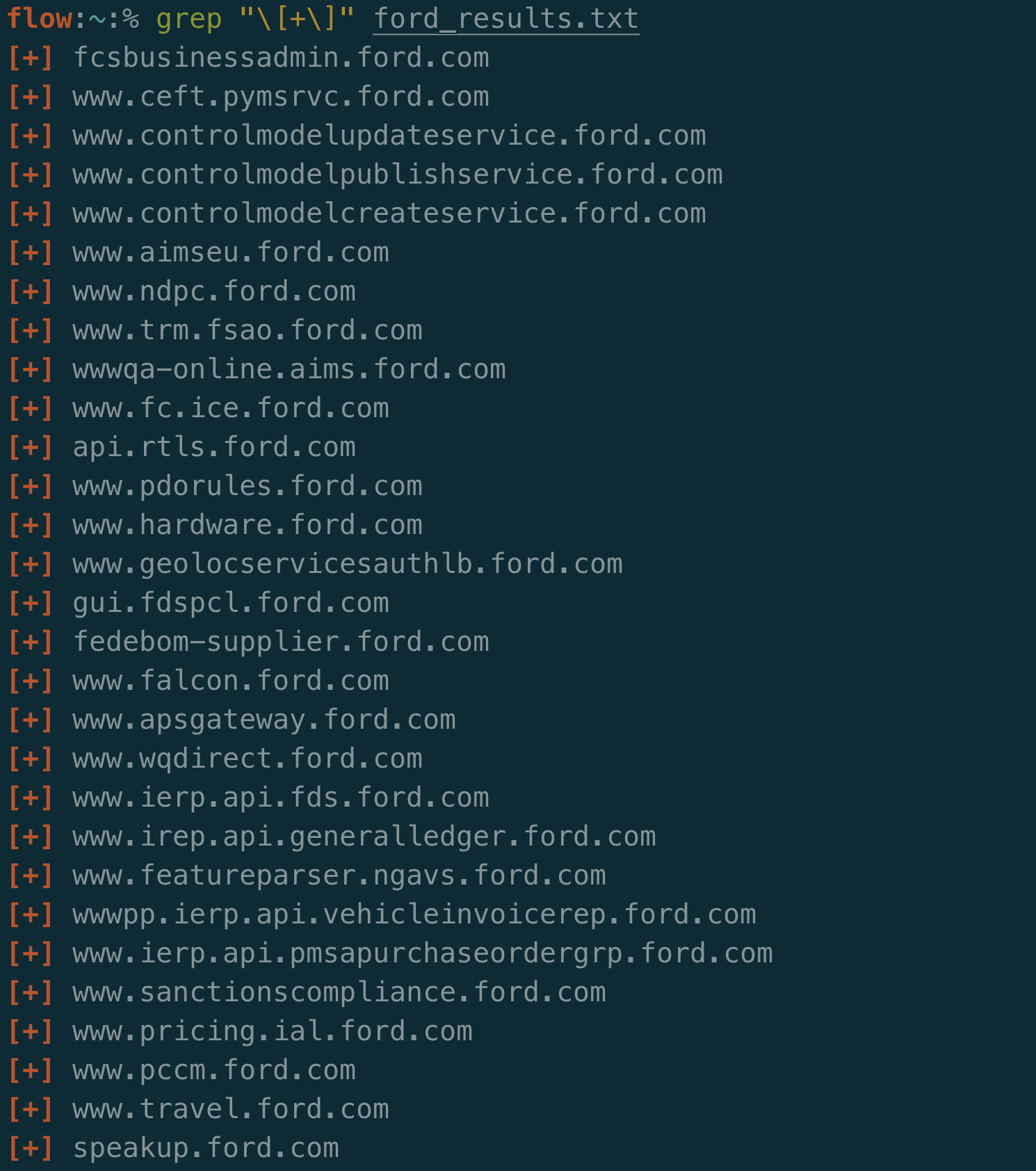

As the scan continues, we eventually see the following:

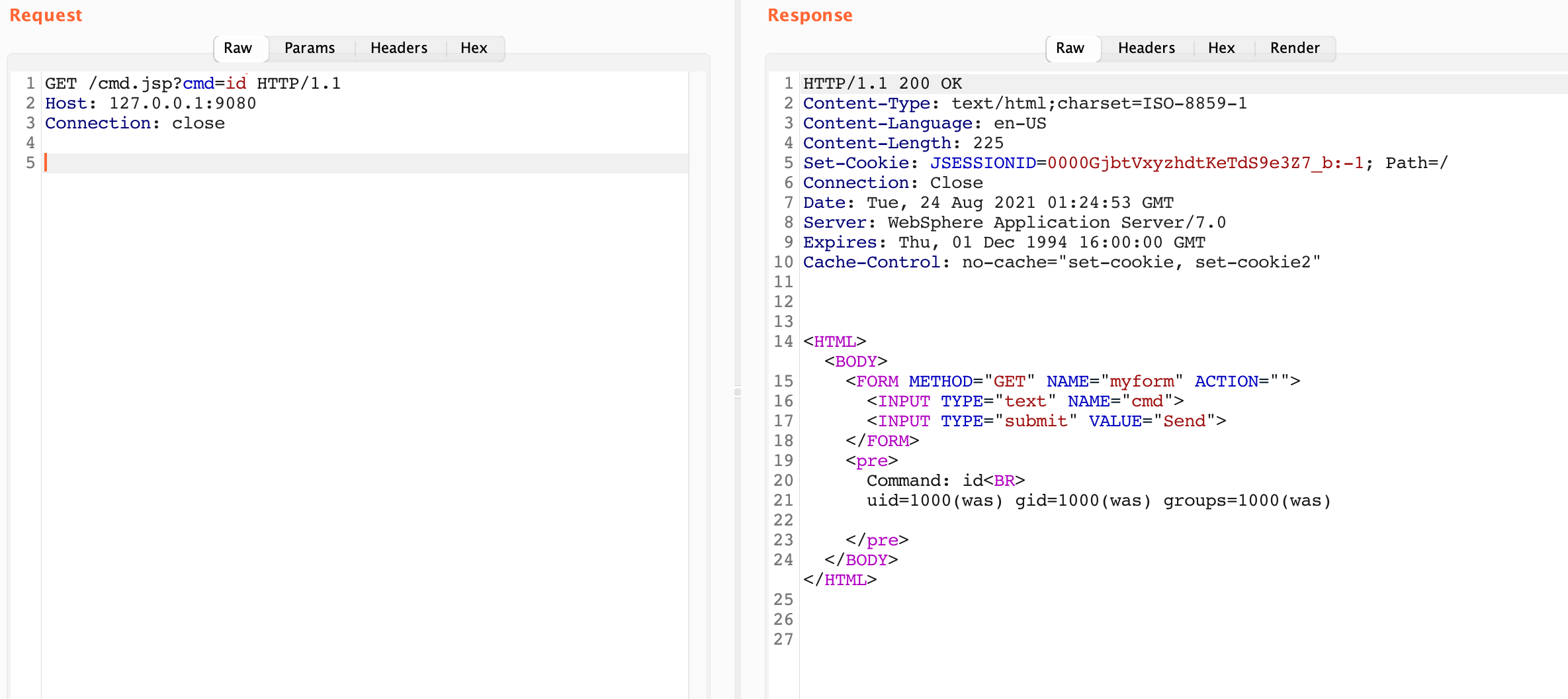

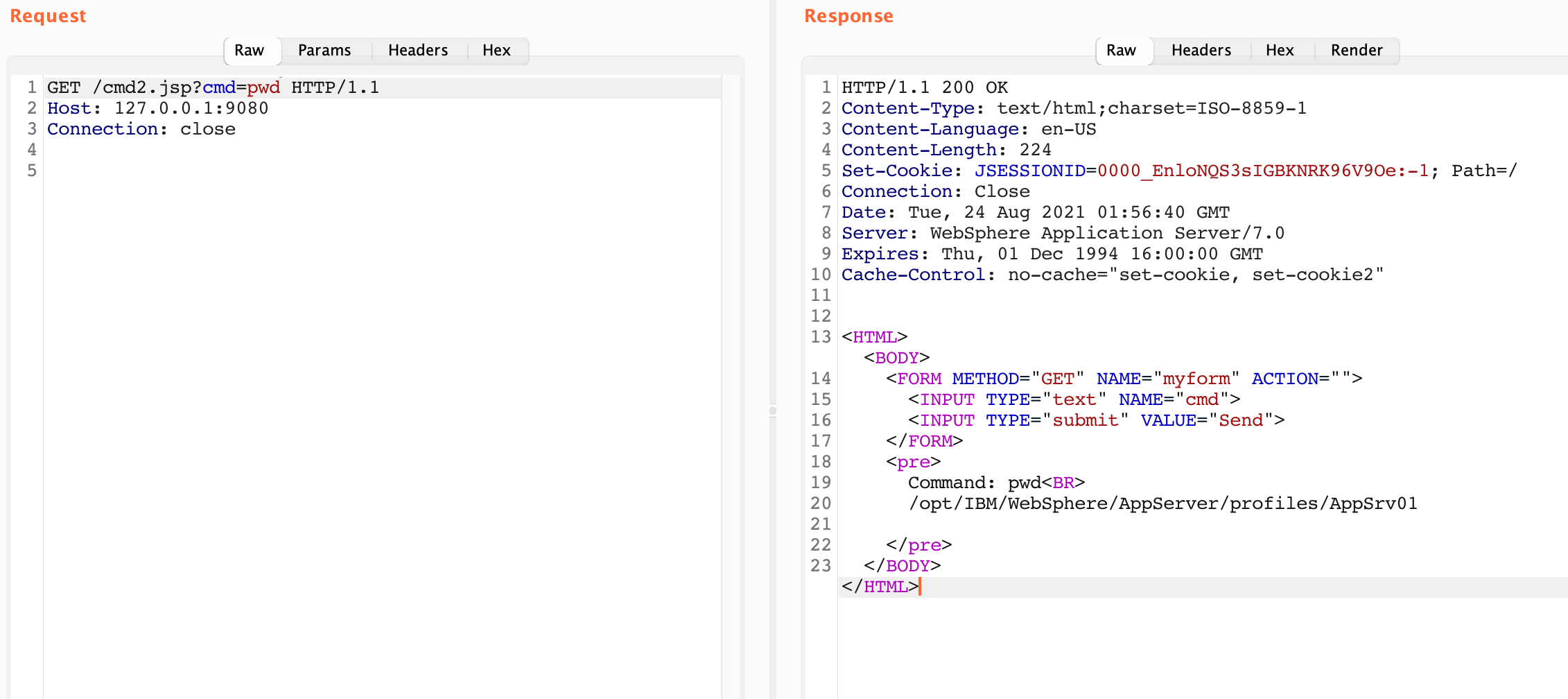

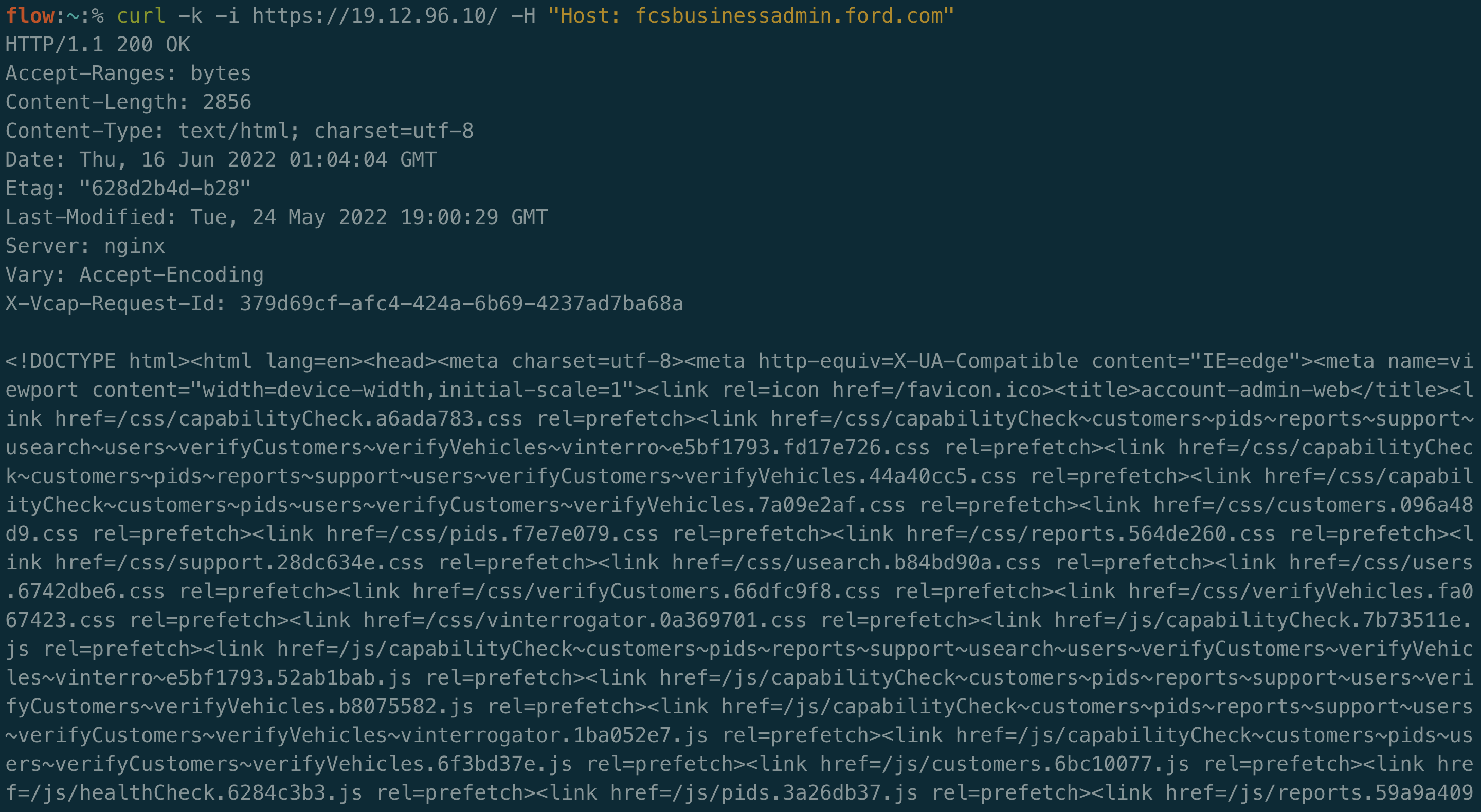

This indicates that fcsbusinessadmin.ford.com is a virtual host for this IP. If we test this out manually we can see that this is indeed correct:

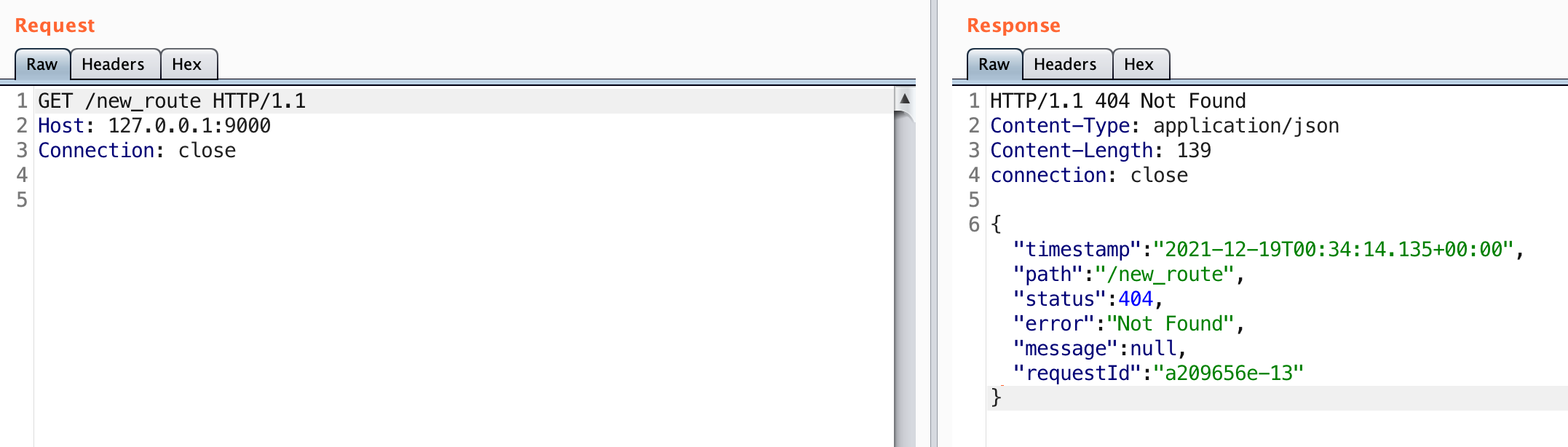

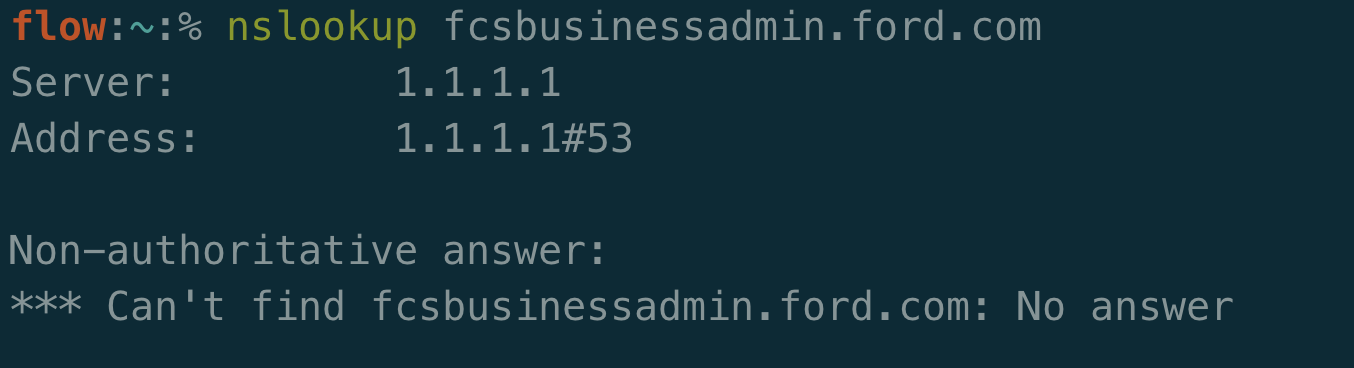

That’s really strange because if you perform an nslookup on the domain there is no A record associated with it:

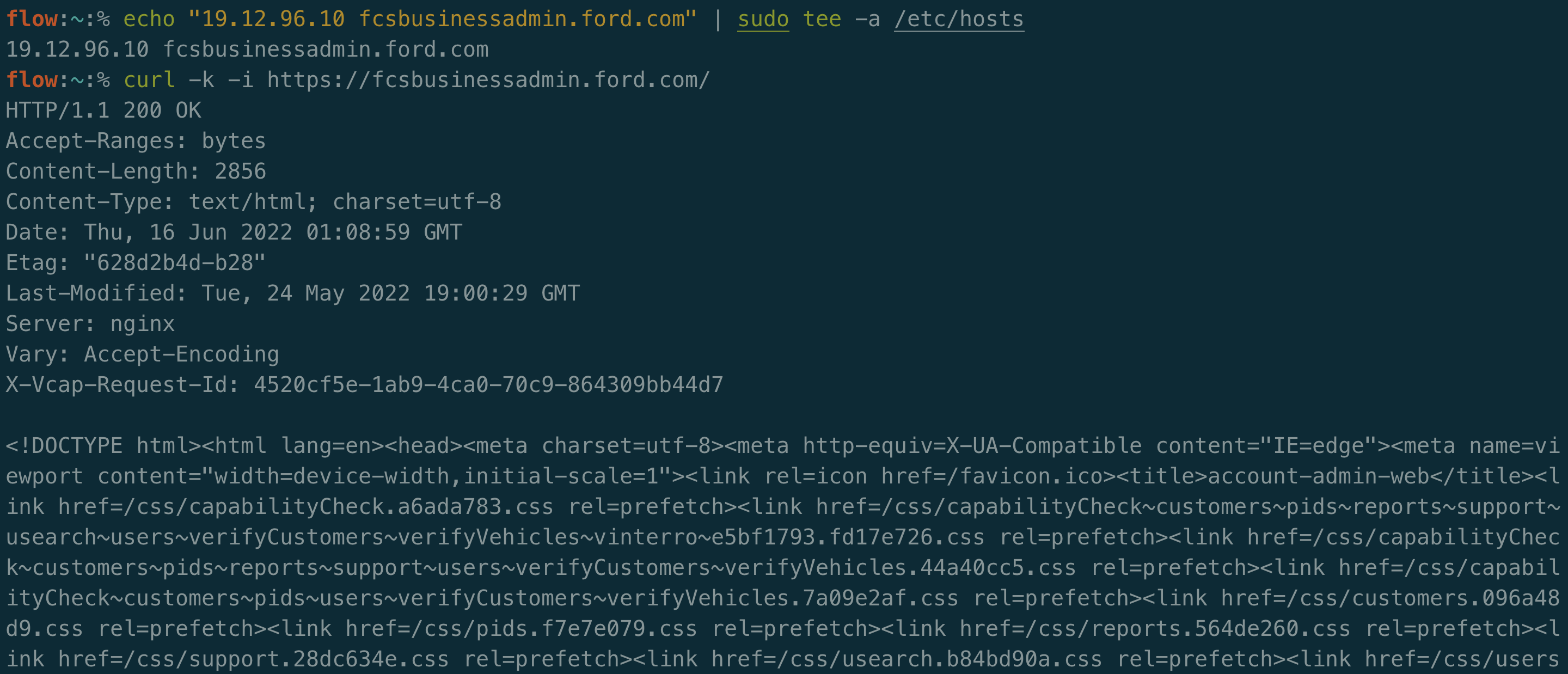

Why is this the case? Ford’s DNS team didn’t intend for fcsbusinessadmin.ford.com to be publicly facing. Because the PCF server still routes it, anyone who knows the hostname can set the Host header to that value and reach the site. Alternatively, you can add an entry to your /etc/hosts file that maps 19.12.96.10 to fcsbusinessadmin.ford.com so the mapping persists going forward.

From here you can go ahead and start testing the site normally for bugs.

Let’s look at the total results from VhostFinder:

I ended up with 384 unique virtual hosts associated with this IP that VhostFinder discovered using the Chaos dataset. That’s a nice list of additional targets to test considering DNS and certificate scraping didn’t work.

Wrapping Up

Virtual host enumeration is a great technique to have in your skillset. It’s often forgotten because the existence of virtual hosts is less intuitive than something like directory enumeration. In a network pentest it is crucial not to miss this. If a company asks you to test a range of IPs, there could be thousands of websites and APIs behind a single IP. If you forget to check for this, you could be missing significant coverage.

PCF is a technology that is particularly susceptible to virtual host enumeration. Not all deployment software works this way or responds as nicely. Load balancers can often be vulnerable to the same issue.

Try out different servers to see what works and what doesn’t. Ask bold questions, such as whether a cloud provider or CDN would route domains in the same way. Perhaps you can find additional services where others have not.

On the defensive side, it would be a healthy checkup to ensure your routable domains match your DNS names. If they don’t, figure out whether a host really needs to be exposed. Don’t let DNS be a lie (security by obscurity isn’t a good operating model). In terms of mitigation, you can rate limit by IP to slow an attack. Most WAFs provide protection against directory enumeration, but they typically do not protect against virtual host enumeration.
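That checkup can start as a simple comparison between the hostnames your router will serve and the names that actually resolve. Below is a minimal sketch with function names of my own. It assumes you can export the list of routed hostnames from your platform and that you run it from a vantage point using the same resolver an outsider would.

```python
import socket


def resolves(hostname):
    """True if the system resolver returns any address for the name."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def routes_missing_from_dns(routed_hostnames, resolver=resolves):
    """List routed hostnames that have no DNS record from this vantage point."""
    return sorted(h for h in set(routed_hostnames) if not resolver(h))
```

Every name this returns is reachable only by someone who guesses it, which is exactly the population that virtual host enumeration uncovers.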

I hope you enjoyed this blog and learned a bit about virtual host enumeration and PCF. I’d love to hear if you have any cool stories (like tens to hundreds of findings at once) from testing this out.